When OpenPose is built, openpose.bin or openpose.exe is automatically created. This program provides most of OpenPose's features.

Once you are comfortable with this program, you can therefore drive it from Python or C/C++ to use OpenPose's features in your own code.

Detailed usage of openpose.bin can be found in OpenPose Doc-Demo.

Prerequisites

Install OpenPose 1.7.0.

Sample Image and video files

The image and video files used for testing are in the openpose-1.7.0/examples/media directory. They are included in the OpenPose source tree.

    root@spypiggy-nx:/usr/local/src/openpose-1.7.0/examples/media# ls -al
    total 4032
    drwxrwxr-x  2 root root    4096 Nov 17 14:48 .
    drwxrwxr-x 11 root root    4096 Nov 17 14:48 ..
    -rwxrwxr-x  1 root root  230425 Nov 17 14:48 COCO_val2014_000000000192.jpg
    -rwxrwxr-x  1 root root  107714 Nov 17 14:48 COCO_val2014_000000000241.jpg
    -rwxrwxr-x  1 root root  208764 Nov 17 14:48 COCO_val2014_000000000257.jpg
    -rwxrwxr-x  1 root root   75635 Nov 17 14:48 COCO_val2014_000000000294.jpg
    -rwxrwxr-x  1 root root  159712 Nov 17 14:48 COCO_val2014_000000000328.jpg
    -rwxrwxr-x  1 root root   81713 Nov 17 14:48 COCO_val2014_000000000338.jpg
    -rwxrwxr-x  1 root root  131457 Nov 17 14:48 COCO_val2014_000000000357.jpg
    -rwxrwxr-x  1 root root  118630 Nov 17 14:48 COCO_val2014_000000000360.jpg
    -rwxrwxr-x  1 root root  246526 Nov 17 14:48 COCO_val2014_000000000395.jpg
    -rwxrwxr-x  1 root root  118150 Nov 17 14:48 COCO_val2014_000000000415.jpg
    -rwxrwxr-x  1 root root  102365 Nov 17 14:48 COCO_val2014_000000000428.jpg
    -rwxrwxr-x  1 root root  194898 Nov 17 14:48 COCO_val2014_000000000459.jpg
    -rwxrwxr-x  1 root root  131485 Nov 17 14:48 COCO_val2014_000000000474.jpg
    -rwxrwxr-x  1 root root  105570 Nov 17 14:48 COCO_val2014_000000000488.jpg
    -rwxrwxr-x  1 root root   22611 Nov 17 14:48 COCO_val2014_000000000536.jpg
    -rwxrwxr-x  1 root root  188065 Nov 17 14:48 COCO_val2014_000000000544.jpg
    -rwxrwxr-x  1 root root  130509 Nov 17 14:48 COCO_val2014_000000000564.jpg
    -rwxrwxr-x  1 root root  129689 Nov 17 14:48 COCO_val2014_000000000569.jpg
    -rwxrwxr-x  1 root root   96802 Nov 17 14:48 COCO_val2014_000000000589.jpg
    -rwxrwxr-x  1 root root  112452 Nov 17 14:48 COCO_val2014_000000000623.jpg
    -rw-rw-r--  1 root root 1395096 Nov 17 14:48 video.avi

OpenPose models

The cmake build has already downloaded several models.

The three models downloaded during that build are enough for most tasks.

| Model Name | Description | Installed |
|---|---|---|
| BODY_25_MODEL | OpenPose's default model for body keypoint detection | Y |
| BODY_COCO_MODEL | Microsoft's COCO (Common Objects in Context) model for keypoint detection. Refer to "JetsonNano-Human Pose estimation using tensorflow" for a description of the keypoints used in this model. | N |
| BODY_MPI_MODEL | MPII (Max Planck Institut Informatik) model for keypoint detection. Refer to "Jetson Xavier NX - Human Pose estimation using tensorflow (mpii)" for a description of the keypoints used in this model. | N |
| BODY_FACE_MODEL | OpenPose's default model for face keypoint detection | Y |
| BODY_HAND_MODEL | OpenPose's default model for hand keypoint detection | Y |

Model files exist in the openpose-1.7.0/models directory as follows.

    root@spypiggy-nx:/usr/local/src/openpose-1.7.0/models# ls -al hand/ pose/ face/
    face/:
    total 150816
    drwxrwxr-x 2 root root      4096 Feb 13 20:54 .
    drwxrwxr-x 6 root root      4096 Nov 17 14:48 ..
    -rw-rw-r-- 1 root root    676709 Nov 17 14:48 haarcascade_frontalface_alt.xml
    -rw-rw-r-- 1 root root     25962 Nov 17 14:48 pose_deploy.prototxt
    -rw-r--r-- 1 root root 153717332 Feb 13 20:54 pose_iter_116000.caffemodel

    hand/:
    total 143928
    drwxrwxr-x 2 root root      4096 Feb 13 20:54 .
    drwxrwxr-x 6 root root      4096 Nov 17 14:48 ..
    -rwxrwxr-x 1 root root     26452 Nov 17 14:48 pose_deploy.prototxt
    -rw-r--r-- 1 root root 147344024 Feb 13 20:55 pose_iter_102000.caffemodel

    pose/:
    total 20
    drwxrwxr-x 5 root root 4096 Nov 17 14:48 .
    drwxrwxr-x 6 root root 4096 Nov 17 14:48 ..
    drwxrwxr-x 2 root root 4096 Feb 13 20:54 body_25
    drwxrwxr-x 2 root root 4096 Nov 17 14:48 coco
    drwxrwxr-x 2 root root 4096 Nov 17 14:48 mpi

Body Keypoints

The location of the coordinates used in the most commonly used BODY-25-MODEL is as follows.
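
For quick reference in code, the BODY_25 index order documented by OpenPose can be written down as a Python list. This is a minimal sketch: the list name `BODY_25_KEYPOINTS` and the helper `keypoint_name` are illustrative names chosen here, not part of OpenPose itself.

```python
# Index-to-name table for the BODY_25 model, in OpenPose's documented order.
# The variable and function names are illustrative, not an OpenPose API.
BODY_25_KEYPOINTS = [
    "Nose", "Neck",
    "RShoulder", "RElbow", "RWrist",
    "LShoulder", "LElbow", "LWrist",
    "MidHip",
    "RHip", "RKnee", "RAnkle",
    "LHip", "LKnee", "LAnkle",
    "REye", "LEye", "REar", "LEar",
    "LBigToe", "LSmallToe", "LHeel",
    "RBigToe", "RSmallToe", "RHeel",
]


def keypoint_name(index):
    """Return the body part name for a BODY_25 keypoint index (0-24)."""
    if not 0 <= index < len(BODY_25_KEYPOINTS):
        raise IndexError("BODY_25 keypoint index must be in the range 0-24")
    return BODY_25_KEYPOINTS[index]


print(keypoint_name(8))
```

A table like this makes it easier to label the numbers that appear later in the JSON output.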

Whenever in doubt, use the --help option, which describes every feature openpose.bin provides in detail. The most important and frequently used options are covered below; any option not described here can be looked up with --help.

Keypoint detection using openpose.bin

Body Keypoint detection

Let's use openpose.bin to detect keypoints in image files. Create the /usr/local/src/output directory beforehand.

    ./build/examples/openpose/openpose.bin --image_dir ./examples/media --write_images /usr/local/src/output --write_json /usr/local/src/output --net_resolution "320x224"
    Starting OpenPose demo...
    Configuring OpenPose...
    Starting thread(s)...
    Auto-detecting all available GPUs... Detected 1 GPU(s), using 1 of them starting at GPU 0.
    OpenPose demo successfully finished. Total time: 10.629069 seconds.

The output images and JSON files should now be in the /usr/local/src/output directory.

| Option | Description |
|---|---|
| image_dir | Path of the directory containing the input images. Put the image files you want to process in this directory. |
| write_images | Path of the directory where the rendered output images are saved. |
| write_json | Directory to write OpenPose output in JSON format. It includes body, hand, and face pose keypoints (2-D and 3-D), as well as pose candidates. |
| net_resolution | Must be multiples of 16. Increasing it potentially increases accuracy; decreasing it increases speed. For the best speed-accuracy balance, keep the aspect ratio as close as possible to that of the images or videos being processed. With `-1` in either dimension, OpenPose chooses the optimal aspect ratio based on the other value you supply. The Jetson Nano and Xavier NX have limited memory, so set a suitably small value explicitly. If this option is not specified, the process may be forcibly terminated on Jetson boards. |
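
The net_resolution rule above (multiples of 16, aspect ratio close to the input) can be turned into a small calculation. The sketch below is only an illustration: `fit_net_resolution` is a hypothetical helper, not an OpenPose function, and it assumes you pick the network height yourself and want the closest matching width.

```python
def fit_net_resolution(image_width, image_height, net_height):
    """Return a "WxH" string whose width is the multiple of 16 that best
    preserves the input aspect ratio for the chosen network height.

    Hypothetical helper for building a --net_resolution value.
    """
    if net_height <= 0 or net_height % 16 != 0:
        raise ValueError("net_height must be a positive multiple of 16")
    if image_width <= 0 or image_height <= 0:
        raise ValueError("image dimensions must be positive")
    # Width that would keep the aspect ratio exactly.
    exact_width = net_height * image_width / image_height
    # Snap to the nearest multiple of 16, never going below 16.
    net_width = max(16, round(exact_width / 16) * 16)
    return f"{net_width}x{net_height}"


# A 640x480 input with a network height of 224.
print(fit_net_resolution(640, 480, 224))
```

The smaller the chosen height, the less memory the network needs, which is the main concern on the Jetson boards.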

If you are a programmer, you will probably be more interested in the JSON files than in the output images. Each JSON file stores the keypoint information detected by OpenPose.

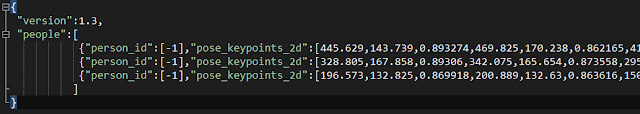

The following is a comparison between the output image and the JSON file. The JSON file contains the keypoint information for the three people detected in the image. Read the numbers in groups of three: the first two are the x and y coordinates, and the third is the confidence score.

Since the BODY_25 model marks 25 body keypoints, each person has 25 x 3 = 75 numbers. If a keypoint is not detected, all three of its numbers are 0.
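
Reading the numbers in groups of three is straightforward in Python. The following is a minimal sketch, assuming the JSON output uses a top-level `people` list whose entries hold a flat `pose_keypoints_2d` array; the function names and the sample data are made up for illustration.

```python
import json


def split_keypoints(flat):
    """Group a flat [x0, y0, c0, x1, y1, c1, ...] list into (x, y, c) triples."""
    if len(flat) % 3 != 0:
        raise ValueError("keypoint list length must be a multiple of 3")
    return [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]


def load_body_keypoints(json_text):
    """Return one list of (x, y, confidence) triples per detected person."""
    data = json.loads(json_text)
    return [
        split_keypoints(person.get("pose_keypoints_2d", []))
        for person in data.get("people", [])
    ]


# Synthetic sample: one person, only keypoint 0 detected, the other 24 are zeros.
sample = json.dumps(
    {"people": [{"pose_keypoints_2d": [100.5, 50.0, 0.9] + [0, 0, 0] * 24}]}
)
people = load_body_keypoints(sample)

# Keep only keypoints that were actually detected (confidence above 0).
detected = [(i, kp) for i, kp in enumerate(people[0]) if kp[2] > 0]
print(len(people[0]), detected)
```

To read a real result, pass the contents of one of the `*_keypoints.json` files to `load_body_keypoints` instead of the synthetic sample.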

Body, Hand, Face Keypoint detection

Hand and face keypoints can easily be added with the --hand and --face options.

    ./build/examples/openpose/openpose.bin --image_dir /usr/local/src/image \
        --write_images /usr/local/src/output \
        --write_json /usr/local/src/output \
        --net_resolution "368x320" \
        --hand \
        --hand_net_resolution "256x256" \
        --face \
        --face_net_resolution "256x256"
    Starting OpenPose demo...
    Configuring OpenPose...
    Starting thread(s)...
    Auto-detecting all available GPUs... Detected 1 GPU(s), using 1 of them starting at GPU 0.
    OpenPose demo successfully finished. Total time: 15.909267 seconds.

The output images and JSON files should now be in the /usr/local/src/output directory.

| Option | Description |
|---|---|
| hand | Enables hand keypoint detection. |
| hand_net_resolution | Must be multiples of 16 and square (width equal to height). Analogous to `net_resolution` but applied to the hand keypoint detector. The Jetson Nano and Xavier NX have limited memory, so set a suitably small value explicitly. If this option is not specified, the process may be forcibly terminated on Jetson boards. |
| face | Enables face keypoint detection. |
| face_net_resolution | Must be multiples of 16 and square (width equal to height). Analogous to `net_resolution` but applied to the face keypoint detector. The Jetson Nano and Xavier NX have limited memory, so set a suitably small value explicitly. If this option is not specified, the process may be forcibly terminated on Jetson boards. |

With the default hand detector, each hand consists of 21 keypoints. As with the body, each keypoint is stored as three values: two coordinates and one confidence value.

With the default face detector, the face consists of 70 keypoints, each again stored as two coordinates and one confidence value. These 70 keypoints are the same 68 keypoints used by many face recognition libraries such as dlib, plus two extra points, one for the center of each eye.
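
The keypoint counts above give a simple sanity check for a result file. This is a hedged sketch, assuming the per-person keys are named `face_keypoints_2d`, `hand_left_keypoints_2d` and `hand_right_keypoints_2d` alongside `pose_keypoints_2d`, and that a part that was not requested is written as an empty list; the helper names and the sample person are illustrative only.

```python
# Expected number of (x, y, confidence) triples per part for the default models.
EXPECTED_POINTS = {
    "pose_keypoints_2d": 25,
    "face_keypoints_2d": 70,
    "hand_left_keypoints_2d": 21,
    "hand_right_keypoints_2d": 21,
}


def count_keypoints(person):
    """Return {key: number of triples} for one entry of the "people" list."""
    return {key: len(person.get(key, [])) // 3 for key in EXPECTED_POINTS}


def missing_parts(person):
    """List the parts whose keypoint count differs from the expected one."""
    counts = count_keypoints(person)
    return [key for key, expected in EXPECTED_POINTS.items()
            if counts[key] != expected]


# Synthetic person: body, face and left hand present, right hand missing.
person = {
    "pose_keypoints_2d": [0.0] * (25 * 3),
    "face_keypoints_2d": [0.0] * (70 * 3),
    "hand_left_keypoints_2d": [0.0] * (21 * 3),
    "hand_right_keypoints_2d": [],
}
print(count_keypoints(person))
print(missing_parts(person))
```

A check like this quickly shows whether the run actually included the --hand and --face options.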

Detecting keypoints in video files

You can detect keypoints in video files by specifying the path to the video file using --video instead of --image_dir.

    ./build/examples/openpose/openpose.bin \
        --video ./examples/media/video.avi \
        --net_resolution "256x128" \
        --write_json /usr/local/src/output \
        --write_video /usr/local/src/output/result.avi

Starting OpenPose demo...

Configuring OpenPose...

Starting thread(s)...

Auto-detecting all available GPUs... Detected 1 GPU(s), using 1 of them starting at GPU 0.

OpenPose demo successfully finished. Total time: 65.039817 seconds.

This command saves the rendered output as the result.avi video file and writes the keypoint information extracted from every frame to a JSON file. The sample video consists of 205 frames, so 205 JSON files are created.

    root@spypiggy-nx:/usr/local/src/output# ls -l
    total 17088
    -rw-r--r-- 1 root root 16582436 Feb 14 20:38 result.avi
    -rw-r--r-- 1 root root     2996 Feb 14 20:37 video_000000000000_keypoints.json
    -rw-r--r-- 1 root root     2379 Feb 14 20:37 video_000000000001_keypoints.json
    -rw-r--r-- 1 root root     3053 Feb 14 20:37 video_000000000002_keypoints.json
    -rw-r--r-- 1 root root     3259 Feb 14 20:37 video_000000000003_keypoints.json
    ......
    ......
    -rw-r--r-- 1 root root     4093 Feb 14 20:38 video_000000000202_keypoints.json
    -rw-r--r-- 1 root root     4174 Feb 14 20:38 video_000000000203_keypoints.json
    -rw-r--r-- 1 root root     4197 Feb 14 20:38 video_000000000204_keypoints.json

However, when openpose.bin processes video files or camera frames, real-time use is very difficult because the analysis result for each frame is written out as a file.
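
For offline processing, the per-frame files can be read back in frame order after the run has finished. The sketch below assumes the `video_<frame number>_keypoints.json` naming shown in the listing and a top-level `people` list in each file; `people_per_frame` is a hypothetical helper, and the demo writes a few fake files into a temporary directory instead of touching real output.

```python
import glob
import json
import os
import tempfile


def people_per_frame(output_dir, prefix="video"):
    """Return the number of detected people for each frame, in frame order.

    The zero-padded frame number in the file name means a plain sort
    also sorts by frame.
    """
    pattern = os.path.join(output_dir, f"{prefix}_*_keypoints.json")
    counts = []
    for path in sorted(glob.glob(pattern)):
        with open(path, encoding="utf-8") as f:
            counts.append(len(json.load(f).get("people", [])))
    return counts


# Demo with synthetic files standing in for real OpenPose output.
with tempfile.TemporaryDirectory() as tmp:
    for frame, n_people in enumerate([3, 2, 0]):
        name = f"video_{frame:012d}_keypoints.json"
        with open(os.path.join(tmp, name), "w", encoding="utf-8") as f:
            json.dump({"people": [{} for _ in range(n_people)]}, f)
    demo_counts = people_per_frame(tmp)

print(demo_counts)
```

Pointing `people_per_frame` at /usr/local/src/output after the video command above would give one count per frame of the sample video.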

Useful options

| Option | Description |
|---|---|
| logging_level | Integer in the range [0, 255]. 0 will output any opLog() message, while 255 will not output any. Current OpenPose library messages are in the range 0-4: 1 for low priority messages and 4 for important ones. Type: int32. Default: 3. |
| display | Display mode: -1 for automatic selection; 0 for no display (useful if there is no X server and/or to slightly speed up the processing if visual output is not required); 2 for 2-D display; 3 for 3-D display (if `--3d` enabled); and 1 for both 2-D and 3-D display. Type: int32. Default: -1. Using 0 (no display) can improve performance by about 30%. |
| render_pose | If you are not using --write_video or --write_images, you can speed up the processing with --render_pose 0. |
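
When only the JSON output matters, the options in this table can be combined into one argument list. This is a sketch rather than a recommended configuration: `build_json_only_args` is a hypothetical helper, the paths are the ones used earlier in this article, and the list is only built and printed here, not executed.

```python
import shlex


def build_json_only_args(openpose_bin, video_path, json_dir, net_resolution):
    """Build an argument list for a run that writes JSON only.

    --display 0 turns off the preview window and --render_pose 0 skips
    drawing the skeleton, which is safe because no image or video is written.
    """
    return [
        openpose_bin,
        "--video", video_path,
        "--write_json", json_dir,
        "--net_resolution", net_resolution,
        "--display", "0",
        "--render_pose", "0",
    ]


args = build_json_only_args(
    "./build/examples/openpose/openpose.bin",
    "./examples/media/video.avi",
    "/usr/local/src/output",
    "256x128",
)
# shlex.join (Python 3.8+) shows the list as a copy-pasteable shell command.
print(shlex.join(args))
```

The resulting list can be passed directly to `subprocess.Popen` or `subprocess.run` from the OpenPose source directory.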

Wrapping up

openpose.bin performs its various functions through command-line options. Once you are familiar with them, you can use OpenPose by controlling this program from whatever programming language you prefer.

Controlling openpose.bin using Python

The simple Python code below runs the following command from Python.

./build/examples/openpose/openpose.bin --image_dir /usr/local/src/image --write_images /usr/local/src/output --write_json /usr/local/src/output --net_resolution "320x224"

    import os
    import subprocess
    import time

    # Path to the demo binary (named openpose_bin so it does not shadow
    # Python's built-in exec) and the OpenPose source root.
    openpose_bin = '/usr/local/src/openpose-1.7.0/build/examples/openpose/openpose.bin'
    working_dir = '/usr/local/src/openpose-1.7.0/'
    image_dir_info = "/usr/local/src/image"
    write_images_info = "/usr/local/src/output"
    write_json_info = "/usr/local/src/output"
    net_resolution_info = "320x224"

    params = [
        openpose_bin,
        "--image_dir", image_dir_info,
        "--write_images", write_images_info,
        "--write_json", write_json_info,
        "--net_resolution", net_resolution_info,
        # No display will speed up the processing time.
        "--display", "0",
    ]

    # chdir is necessary. If not set, searching the model path may fail.
    os.chdir(working_dir)

    s = time.time()
    # Only stdout is piped, so err is always None here.
    process = subprocess.Popen(params, stdout=subprocess.PIPE)
    output, err = process.communicate()
    exit_code = process.wait()
    e = time.time()

    output_str = output.decode('utf-8')
    print("Python Logging popen exit code :%d" % exit_code)
    print("Python Logging popen return :%s" % output_str)
    print("Python Logging Total Processing time:%6.2f" % (e - s))

<op_control.py>

When the Python program runs, it calls openpose.bin and passes the option values along with the call. The output images and JSON files are saved in the /usr/local/src/output directory. What you do next with the JSON files stored in this directory is up to your own Python code.

    root@spypiggy-nx:/usr/local/src/study# python3 op_control.py
    Python Logging popen exit code :0
    Python Logging popen return :Starting OpenPose demo...
    Configuring OpenPose...
    Starting thread(s)...
    Auto-detecting all available GPUs... Detected 1 GPU(s), using 1 of them starting at GPU 0.
    OpenPose demo successfully finished. Total time: 5.128867 seconds.
    Python Logging Total Processing time: 5.52

The openpose.bin program alone can do a lot, but in the next article we will look at how to use OpenPose directly from Python code. Some of the code in the previous post, JetsonNano-Human Pose estimation using OpenPose, no longer works because the API changed in OpenPose 1.7. I will fix the code that does not work.

The source code can be downloaded from https://github.com/raspberry-pi-maker/NVIDIA-Jetson.
