Previous posts showed how to use TensorFlow, PyTorch Detectron2, DETR, and the NVIDIA DNN Vision Library.

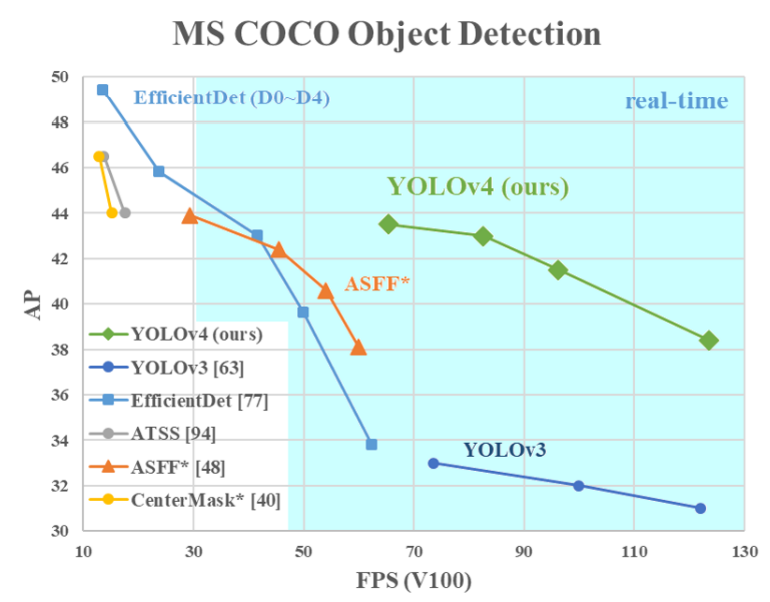

With video files and webcams, the main concerns so far were whether each frame was recognized correctly and how fast the frames were processed (FPS).

Object detection can count the people in a single frame, but it cannot count how many people passed through an area over an hour, because it does not know whether a person in one frame is the same person as in the next. That requires object tracking. There are many tracking algorithms, such as centroid tracking and SORT (Simple Online and Realtime Tracking). Among them, SORT is one of the most popular. It owes much of its accuracy to a Kalman filter, which filters out noise when predicting the path of a moving object. More recently, DeepSORT, which adds deep learning to SORT, has also become widely used.
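To make the role of the Kalman filter more concrete, here is a minimal sketch of a one-dimensional constant-velocity filter in Python with NumPy. It is not the filter used inside SORT, which tracks whole bounding boxes rather than a single coordinate. The class name, the noise values `q` and `r`, and the sample measurements are all assumptions chosen for illustration.

```python
import numpy as np


class ConstantVelocityKalman1D:
    """Tracks one coordinate with the state [position, velocity]."""

    def __init__(self, x0, q=1e-2, r=4.0):
        self.x = np.array([float(x0), 0.0])   # state estimate
        self.P = np.eye(2) * 10.0             # state covariance (uncertain start)
        self.F = np.array([[1.0, 1.0],        # position += velocity per frame
                           [0.0, 1.0]])
        self.H = np.array([[1.0, 0.0]])       # only the position is measured
        self.Q = np.eye(2) * q                # process noise (assumed value)
        self.R = np.array([[r]])              # measurement noise (assumed value)

    def predict(self):
        """Advance the state by one frame and return the predicted position."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[0]

    def update(self, z):
        """Correct the prediction with a measured position z."""
        y = np.array([z]) - self.H @ self.x          # innovation
        S = self.H @ self.P @ self.H.T + self.R      # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)     # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(2) - K @ self.H) @ self.P
        return self.x[0]


def track(measurements):
    """Run predict/update over a list of noisy positions."""
    kf = ConstantVelocityKalman1D(measurements[0])
    smoothed = []
    for z in measurements[1:]:
        kf.predict()
        smoothed.append(kf.update(z))
    return kf, smoothed


# Hypothetical data: an object moving 5 px/frame, measured with +/-1 px noise.
measurements = [0, 6, 9, 16, 19, 26, 29, 36, 39, 46]
kf, smoothed = track(measurements)
next_position = kf.predict()
print("estimated velocity:", kf.x[1])
print("predicted next position:", next_position)
```

The filter is never told the velocity. It infers it from the noisy positions, and the prediction for the next frame is what a tracker would compare against the next set of detections.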

SORT and DeepSORT

- Object detection: You can use various frameworks such as YOLO, PyTorch Detectron2, or the TensorFlow Object Detection API.

- Kalman filter: Filters noise out of the object's previous path and speed, then predicts its next position.

- Hungarian algorithm: Matches the positions predicted by the Kalman filter against the positions of the objects actually detected. From this matching it decides which detections are existing objects that have moved, which are new objects, and which existing objects have disappeared.
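The matching step can be sketched as follows. This is a simplified illustration and not code from the repository used later in this post: it uses IoU (intersection over union) between boxes as the matching cost, as SORT does, and calls `scipy.optimize.linear_sum_assignment` to solve the assignment. The function names, the `min_iou` threshold of 0.3, and the sample boxes are assumptions. DeepSORT additionally compares appearance features, which this sketch leaves out.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(predicted, detected, min_iou=0.3):
    """Match predicted track boxes to detected boxes.

    Returns (matches, lost_tracks, new_detections) as lists of indices.
    """
    if not predicted or not detected:
        return [], list(range(len(predicted))), list(range(len(detected)))

    # Cost is 1 - IoU, so a good overlap means a low cost.
    cost = np.array([[1.0 - iou(p, d) for d in detected] for p in predicted])
    rows, cols = linear_sum_assignment(cost)

    # Discard assignments whose overlap is too small to be the same object.
    matches = [(int(r), int(c)) for r, c in zip(rows, cols)
               if 1.0 - cost[r, c] >= min_iou]
    matched_tracks = {r for r, _ in matches}
    matched_dets = {c for _, c in matches}
    lost = [i for i in range(len(predicted)) if i not in matched_tracks]
    new = [j for j in range(len(detected)) if j not in matched_dets]
    return matches, lost, new


# Hypothetical boxes: two people moved slightly, one left, one appeared.
predicted = [(10, 10, 50, 90), (100, 20, 140, 100), (300, 40, 340, 120)]
detected = [(102, 22, 142, 102), (12, 11, 52, 91), (500, 50, 540, 130)]
matches, lost, new = associate(predicted, detected)
print(matches, lost, new)  # [(0, 1), (1, 0)] [2] [2]
```

Matched pairs keep their track ID, unmatched tracks are candidates for removal, and unmatched detections start new tracks.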

DeepSORT Object Tracking Using TensorFlow and YOLOv4

Prerequisites

Replace the default Ubuntu desktop with LXDE first. This frees up about 1 GB of memory.

How to free up memory by changing the desktop is described in "Use more memory by changing Ubuntu desktop to LXDE".
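To check how much memory is actually available before and after switching desktops, one option is to read the `MemAvailable` field from `/proc/meminfo`. The following is a minimal sketch in Python. The function names are my own, and it assumes a Linux kernel that reports `MemAvailable`.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer values.

    Most fields are reported in kB; a few counters have no unit.
    """
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            info[key.strip()] = int(parts[0])
    return info


def available_mib(text):
    """Return MemAvailable in MiB, or None if the field is missing."""
    kb = parse_meminfo(text).get("MemAvailable")
    return None if kb is None else kb / 1024.0


def read_available_mib(path="/proc/meminfo"):
    """Read the file at `path` and return MemAvailable in MiB."""
    with open(path) as f:
        return available_mib(f.read())
```

Calling `read_available_mib()` once under the Ubuntu desktop and once under LXDE shows how much memory the change frees on a given board.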

Why should I install TensorFlow?

The files market1501.pb, mars.pb, and mars-small128.pb are the models that DeepSORT requires. These .pb files are TensorFlow models.

Therefore, TensorFlow must be installed to use DeepSORT. If you use a DeepSORT model built for PyTorch, you would need to install PyTorch instead of TensorFlow.

Install TensorFlow

Install necessary packages

apt-get install liblapack-dev libatlas-base-dev gfortran

#numpy to 1.19.0

pip3 install --upgrade numpy

pip3 install Keras==2.3.1

pip3 install scikit-learn==0.21.2

pip3 install scipy

#scipy to 1.5.0

pip3 install --upgrade scipy
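Since Keras and scikit-learn are pinned to exact versions above, it can be worth confirming what actually got installed before continuing. The following is a minimal sketch and not part of the repository; `PINNED`, `installed_version`, and `check_versions` are names I made up for this example. It falls back to `pkg_resources` on Python versions that do not ship `importlib.metadata`.

```python
# Versions pinned in the install commands of this post.
PINNED = {"Keras": "2.3.1", "scikit-learn": "0.21.2"}


def installed_version(name):
    """Return the installed version of a distribution, or None if absent."""
    try:
        from importlib import metadata  # Python 3.8 and later
    except ImportError:
        import pkg_resources  # older Pythons such as 3.6
        try:
            return pkg_resources.get_distribution(name).version
        except pkg_resources.DistributionNotFound:
            return None
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def check_versions(expected, lookup=installed_version):
    """Return {name: (expected, found)} for every package that differs."""
    problems = {}
    for name, want in expected.items():
        found = lookup(name)
        if found != want:
            problems[name] = (want, found)
    return problems
```

An empty result from `check_versions(PINNED)` means both pinned packages are installed at the expected versions.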

Clone yehengchen's GitHub repository

cd /usr/local/src

git clone https://github.com/yehengchen/Object-Detection-and-Tracking.git

## Download the YOLOv4 model

cd Object-Detection-and-Tracking/OneStage/yolo/deep_sort_yolov4/

wget https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v3_optimal/yolov4.weights

cd model_data/

cp "/usr/local/src/Object-Detection-and-Tracking/OneStage/yolo/Train-a-YOLOv4-model/cfg/yolov4.cfg" ./

cp yolo_anchors.txt yolo4_anchors.txt

## Convert the YOLOv4 model to a Keras model

## If there is not enough memory, the conversion process might fail.

cd ..

python3 convert.py "model_data/yolov4.cfg" "model_data/yolov4.weights" "model_data/yolo.h5"

## Download the test video clip

wget https://git.kpi.fei.tuke.sk/ml163fe/atvi/-/raw/4f70d8fd9c263b5a90dcdbc7a94b1176a520124c/python_objects_detection/TownCentreXVID.avi -O test_video/TownCentreXVID.avi

Object tracking with sample video

- The sample video has 11,475 frames in total at 15 frames/second, with a frame size of 1920 x 1080.

root@jetpack-4:/usr/local/src/Object-Detection-and-Tracking/OneStage/yolo/deep_sort_yolov4# python3 main.py -c person -i "./test_video/TownCentreXVID.avi"

Using TensorFlow backend.

2020-06-30 18:40:19.078024: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library libcudart.so.10.2

/usr/local/lib/python3.6/dist-packages/sklearn/utils/linear_assignment_.py:21: DeprecationWarning: The linear_assignment_ module is deprecated in 0.21 and will be removed from 0.23. Use scipy.optimize.linear_sum_assignment instead.

DeprecationWarning)

WARNING:tensorflow:From main.py:27: The name tf.ConfigProto is deprecated. Please use tf.compat.v1.ConfigProto instead.

2020-06-30 18:40:31.516170: W tensorflow/core/platform/profile_utils/cpu_utils.cc:98] Failed to find bogomips in /proc/cpuinfo; cannot determine CPU frequency

..........

2020-06-30 18:41:59.919461: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library libcudnn.so.8

2020-06-30 18:42:07.822707: W tensorflow/core/common_runtime/bfc_allocator.cc:239] Allocator (GPU_0_bfc) ran out of memory trying to allocate 1.75GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

2020-06-30 18:42:07.823401: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library libcublas.so.10

2020-06-30 18:42:15.321959: W tensorflow/core/common_runtime/bfc_allocator.cc:239] Allocator (GPU_0_bfc) ran out of memory trying to allocate 1.21GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

2020-06-30 18:42:16.545443: W tensorflow/core/common_runtime/bfc_allocator.cc:239] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.06GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

2020-06-30 18:42:17.661731: W tensorflow/core/common_runtime/bfc_allocator.cc:239] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.06GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.

..........

Net FPS:0.016420 FPS:0.015778

Net FPS:0.257968 FPS:0.234274

Net FPS:0.321014 FPS:0.291949

Net FPS:0.314152 FPS:0.287686
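The `Net FPS` and `FPS` values at the end of the output are easy to lose among the warnings. As a rough sketch, they can be pulled out of a saved log with a regular expression. The function names here are hypothetical, and the two numbers are taken exactly as printed, without assuming anything about what each one measures beyond its label. Skipping the first reading is my own choice, because the first frame includes warm-up time.

```python
import re

# Matches lines such as "Net FPS:0.257968 FPS:0.234274".
FPS_PATTERN = re.compile(r"Net FPS:(?P<net>\d+\.\d+)\s+FPS:(?P<fps>\d+\.\d+)")


def parse_fps(log_text):
    """Return a list of (net_fps, fps) tuples found in the log text."""
    return [(float(m.group("net")), float(m.group("fps")))
            for m in FPS_PATTERN.finditer(log_text)]


def mean_after_warmup(values, skip=1):
    """Average the values after dropping the first `skip` readings."""
    tail = values[skip:]
    return sum(tail) / len(tail) if tail else None


# The four readings shown in the output above.
log_text = """
Net FPS:0.016420 FPS:0.015778
Net FPS:0.257968 FPS:0.234274
Net FPS:0.321014 FPS:0.291949
Net FPS:0.314152 FPS:0.287686
"""
readings = parse_fps(log_text)
fps_mean = mean_after_warmup([fps for _, fps in readings])
print(len(readings), "readings, mean FPS after warm-up:", fps_mean)
```

In practice the log text would come from redirecting the output of `main.py` to a file and reading that file.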

Wrapping up

Unfortunately, the result was a low 0.3 FPS. At this rate, too many frames are skipped on a real-time camera feed, and object tracking accuracy becomes too low. Using YOLOv4 + DeepSORT on the Jetson Nano is therefore not practical. In a later post, I plan to look at how to speed this up, either by applying the YOLOv4-tiny model or by running this example on the Jetson Xavier NX.
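To put 0.3 FPS in perspective, the figures from this post can be plugged into some simple arithmetic. The sketch below is only a back-of-the-envelope calculation with function names of my own choosing. It assumes the processing rate stays constant at 0.3 FPS and that a live camera delivers frames at the same 15 frames/second as the sample clip.

```python
def clip_duration_s(frames, video_fps):
    """Playback length of the clip in seconds."""
    return frames / video_fps


def processing_time_s(frames, processing_fps):
    """Time needed to process every frame at the measured rate."""
    return frames / processing_fps


def frames_per_processed_frame(video_fps, processing_fps):
    """Camera frames that arrive while one frame is being processed."""
    return video_fps / processing_fps


FRAMES = 11475          # frames in the sample video
VIDEO_FPS = 15          # frame rate of the sample video
PROCESSING_FPS = 0.3    # rate measured on the Jetson Nano

duration = clip_duration_s(FRAMES, VIDEO_FPS)
total = processing_time_s(FRAMES, PROCESSING_FPS)
gap = frames_per_processed_frame(VIDEO_FPS, PROCESSING_FPS)

print("clip length: {:.0f} s".format(duration))
print("processing time: {:.1f} h".format(total / 3600))
print("camera frames per processed frame: {:.0f}".format(gap))
```

With these inputs, the clip lasts 765 seconds (12 minutes 45 seconds), while processing every frame would take about 10.6 hours. On a live feed, about 50 camera frames, or roughly 3.3 seconds, pass between two processed frames. A person can move a long way in that time, which makes it hard to match predicted positions to new detections.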