Prerequisites

I use a Python virtual environment on the Xavier NX, and TensorFlow and PyTorch, two frequently used frameworks, are already installed in it. Please complete the setup described in the posts below first, then follow the instructions here. DeepStream 5.0 and its Python examples were installed as described in my previous post.

- Jetson Xavier NX - JetPack 4.4(production release) headless setup

- Jetson Xavier NX - Python virtual environment and ML platforms(tensorflow, Pytorch) installation

- Xavier NX-DeepStream 5.0 #1 - Installation

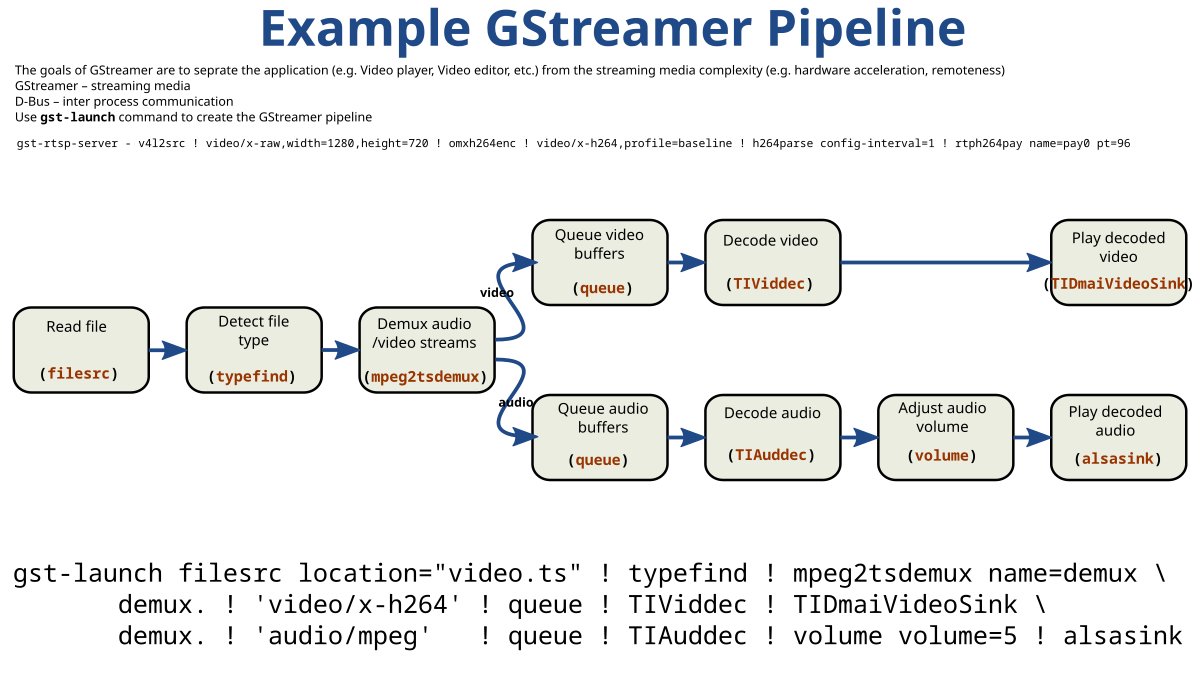

GStreamer Basics

DeepStream uses GStreamer to process video files and video streams from cameras, so some understanding of GStreamer is needed. The Jetson series also uses GStreamer to access the Raspberry Pi CSI camera. In the previous post, Camera-CSI Camera (Raspberry Pi camera V2), I briefly explained GStreamer, focusing on what is needed to use the camera.

GStreamer

GStreamer is a pipeline-based multimedia framework that links together a wide variety of media processing systems to complete complex workflows. For instance, GStreamer can be used to build a system that reads files in one format, processes them, and exports them in another. The formats and processes can be changed in a plug and play fashion.

GStreamer supports a wide variety of media-handling components, including simple audio playback, audio and video playback, recording, streaming and editing. The pipeline design serves as a base to create many types of multimedia applications such as video editors, transcoders, streaming media broadcasters and media players.

It is designed to work on a variety of operating systems, e.g. Linux kernel-based operating systems, the BSDs, OpenSolaris, Android, macOS, iOS, Windows, OS/400.

GStreamer is free and open-source software subject to the terms of the GNU Lesser General Public License (LGPL) and is being hosted at freedesktop.org.

<from https://en.wikipedia.org/wiki/GStreamer>

The following is an excerpt from the previous post.

GStreamer usage

In a gst-launch pipeline, each element is provided by a plug-in, and each element passes its result to the next element through a link.

For example, in the figure, Element1 can be a camera plug-in or a source video file, and Element2 can be a plug-in that resizes the frames received from Element1 and mirrors them. Finally, Element3 can be a plug-in that receives the frames modified by Element2 and displays them on the screen or saves them to a file.
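The Element1 → Element2 → Element3 chain maps directly onto gst-launch-1.0 syntax, where `!` links one element to the next. The sketch below only assembles such a description string in Python. The concrete elements (videotestsrc as Element1, videoscale plus videoflip as Element2, autovideosink as Element3) are my own illustrative choices, not taken from the figure. On a machine with GStreamer and PyGObject, the same string could be passed to `Gst.parse_launch()` or typed after `gst-launch-1.0`.

```python
def build_pipeline(*elements):
    """Join element descriptions with '!' the way gst-launch-1.0 links them."""
    return " ! ".join(elements)


# Illustrative chain (assumed elements, not from the original figure):
#   Element1: a test video source
#   Element2: resize the frames and mirror them left/right
#   Element3: show the result on screen
description = build_pipeline(
    "videotestsrc num-buffers=300",
    "videoscale",
    "video/x-raw,width=640,height=480",  # caps filter: the size videoscale must produce
    "videoflip method=horizontal-flip",
    "videoconvert",
    "autovideosink",
)

if __name__ == "__main__":
    print(description)
```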

<image from https://en.wikipedia.org/wiki/GStreamer>

GStreamer Elements

In the end, all we need to do is build a pipeline by connecting the necessary elements, so we need to know which elements are used to process video files and camera streams.

Most elements are provided by plug-ins, and the plug-in files are in /usr/lib/aarch64-linux-gnu/gstreamer-1.0.
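To see which plug-ins are installed, you can simply list that directory. This is a small sketch that assumes the plug-in libraries follow the usual `libgst<name>.so` naming; `gst-inspect-1.0 <element>` remains the standard tool for looking at a single element's pads and properties.

```python
from pathlib import Path

PLUGIN_DIR = "/usr/lib/aarch64-linux-gnu/gstreamer-1.0"


def plugin_name(filename):
    """'libgstcoreelements.so' -> 'coreelements'; None for any other file."""
    if filename.startswith("libgst") and filename.endswith(".so"):
        return filename[len("libgst"):-len(".so")]
    return None


def list_plugins(plugin_dir=PLUGIN_DIR):
    """Return the sorted plug-in names found in plugin_dir ([] if it is missing)."""
    path = Path(plugin_dir)
    if not path.is_dir():
        return []
    names = (plugin_name(p.name) for p in path.iterdir())
    return sorted(n for n in names if n is not None)


if __name__ == "__main__":
    for name in list_plugins():
        print(name)
```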

Elements that the Python example source code uses frequently:

- v4l2src : v4l2src can be used to capture video from v4l2 devices, like webcams and tv cards.

- filesrc : Read data from a file in the local file system.

- h264parse : Parses H.264 streams

- capsfilter : Pass data without modification, limiting formats. The element does not modify data as such, but can enforce limitations on the data format.

- videoconvert : Convert video frames between a great variety of video formats.

- rtph264pay : Payload-encode H264 video into RTP packets (RFC 3984)

- rtph265pay : Payload-encode H265 video into RTP packets (RFC 7798)

- udpsink : Send data over the network via UDP to the destination set by the host and port properties.

- queue : Data is queued until one of the limits specified by the “max-size-buffers”, “max-size-bytes” and/or “max-size-time” properties has been reached. Any attempt to push more buffers into the queue will block the pushing thread until more space becomes available. The queue will create a new thread on the source pad to decouple the processing on sink and source pad. You can query how many buffers are queued by reading the “current-level-buffers” property. You can track changes by connecting to the notify::current-level-buffers signal (which like all signals will be emitted from the streaming thread). The same applies to the “current-level-time” and “current-level-bytes” properties. The default queue size limits are 200 buffers, 10MB of data, or one second worth of data, whichever is reached first. As said earlier, the queue blocks by default when one of the specified maximums (bytes, time, buffers) has been reached. You can set the “leaky” property to specify that instead of blocking it should leak (drop) new or old buffers. The “underrun” signal is emitted when the queue has less data than the specified minimum thresholds require (by default: when the queue is empty). The “overrun” signal is emitted when the queue is filled up. Both signals are emitted from the context of the streaming thread.

- tee : Split data to multiple pads. Branching the data flow is useful when e.g. capturing a video where the video is shown on the screen and also encoded and written to a file. Another example is playing music and hooking up a visualisation module. One needs to use separate queue elements (or a multiqueue) in each branch to provide separate threads for each branch. Otherwise a blocked dataflow in one branch would stall the other branches.

- fakesrc : The fakesrc element is a multipurpose element that can generate a wide range of buffers and can operate in various scheduling modes.

- fakesink : Dummy sink that swallows everything.
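The tee and queue descriptions above work together in practice: each branch after a tee gets its own queue, so a blocked branch does not stall the others. The sketch below assembles such a gst-launch description for a display branch and a recording branch. The branch contents, the x264enc/matroskamux choice, the file name out.mkv, and the queue settings are illustrative assumptions. `leaky=downstream` makes the display queue drop old buffers instead of blocking when it is full.

```python
def tee_pipeline(source, branches, tee_name="t"):
    """Build a gst-launch description that splits `source` into several branches.

    `branches` is a list of (queue_properties, [elements...]); every branch starts
    with its own queue so that each branch runs in a separate thread.
    """
    parts = [f"{source} ! tee name={tee_name}"]
    for queue_props, elements in branches:
        queue = f"queue {queue_props}".strip()
        parts.append(" ! ".join([f"{tee_name}.", queue, *elements]))
    return " ".join(parts)


description = tee_pipeline(
    "videotestsrc num-buffers=300",
    [
        # Display branch: drop old buffers rather than block if the sink is slow.
        ("leaky=downstream max-size-buffers=5", ["videoconvert", "autovideosink"]),
        # Recording branch (encoder, muxer and file name are illustrative choices).
        ("", ["videoconvert", "x264enc", "matroskamux", "filesink location=out.mkv"]),
    ],
)

if __name__ == "__main__":
    print(description)
```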

NVIDIA Proprietary Elements

These are NVIDIA's own elements used by DeepStream. Their documentation is in the NVIDIA DeepStream Plugin Manual (GStreamer Plugin Details), and the ACCELERATED GSTREAMER USER GUIDE explains in more detail how to use NVIDIA's GStreamer plugins.

- nvv4l2decoder : Video decoder for H.264, H.265, VP8, MPEG4 and MPEG2 formats, uses V4L2 API

- nvvideoconvert : This plugin performs video color format conversion. It accepts NVMM memory as well as RAW (memory allocated using calloc() or malloc()), and provides NVMM or RAW memory at the output.

- nvstreammux : The nvstreammux plugin forms a batch of frames from multiple input sources. When connecting a source to nvstreammux (the muxer), a new pad must be requested from the muxer using gst_element_get_request_pad() and the pad template "sink_%u".

- nvinfer : The nvinfer plugin does inferencing on input data using NVIDIA® TensorRT™.

- nvdsosd : This plugin draws bounding boxes, text, and region of interest (RoI) polygons. (Polygons are presented as a set of lines.)

- nvtracker : This plugin tracks detected objects and gives each new object a unique ID.

- nvmsgconv : The nvmsgconv plugin parses NVDS_EVENT_MSG_META (NvDsEventMsgMeta) type metadata attached to the buffer as user metadata of frame meta and generates the schema payload. For the batched buffer, metadata of all objects of a frame must be under the corresponding frame meta.

- nvmsgbroker : This plugin sends payload messages to the server using a specified communication protocol. It accepts any buffer that has NvDsPayload metadata attached and uses the nvds_msgapi_* interface to send the messages to the server. You must implement the nvds_msgapi_* interface for the protocol to be used and specify the implementing library in the proto-lib property.

- nvegltransform : Video transform element from NVMM memory to EGLImage (supported with nveglglessink only). The example scripts create it only on Jetson, when is_aarch64() returns true.

- nveglglessink : Windowed video playback, NVIDIA EGL/GLES video sink using the default X11 backend

- nvv4l2h264enc : H.264 Encode (NVIDIA Accelerated Encode)

- nvv4l2h265enc : H.265 Encode (NVIDIA Accelerated Encode)
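Put in order, these elements form the pipeline that Example 1 builds in Python. As a rough cross-check, the same chain can be written as a gst-launch-1.0 description; this sketch only assembles the string. The property values mirror the example script (batch-size 1, a 1024x768 muxer output, dstest1_pgie_config.txt), and `m.sink_0` is the standard gst-launch notation for linking to request pad sink_0 of the element named m. I have not verified this exact string on a device.

```python
def deepstream_test1_description(h264_file, config_file="dstest1_pgie_config.txt",
                                 width=1024, height=768, jetson=True):
    """Return a gst-launch-1.0 description approximating deepstream-test1's pipeline."""
    # Decode branch, linked into request pad sink_0 of the muxer named "m".
    decode = f"filesrc location={h264_file} ! h264parse ! nvv4l2decoder ! m.sink_0"
    chain = [
        f"nvstreammux name=m batch-size=1 width={width} height={height} "
        "batched-push-timeout=4000000",
        f"nvinfer config-file-path={config_file}",
        "nvvideoconvert",
        "nvdsosd",
    ]
    if jetson:
        # On Jetson (aarch64) the script inserts nvegltransform before the EGL sink.
        chain.append("nvegltransform")
    chain.append("nveglglessink")
    return decode + " " + " ! ".join(chain)


if __name__ == "__main__":
    print(deepstream_test1_description("sample_720p.h264"))
```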

For developers looking to build their custom application, the ‘deepstream-app’ can be a bit overwhelming to start development. The SDK ships with several simple applications, where developers can learn about basic concepts of DeepStream, constructing a simple pipeline and then progressing to build more complex applications.

Example 1 - H264 video file streaming

The code for the first example is in the deepstream_python_apps/apps/ directories deepstream-test1, deepstream-test1-rtsp-out, and deepstream-test1-usbcam.

```
spypiggy@XavierNX:~/src/deepstream_python_apps/apps/deepstream-test1$ ls -al
total 28
drwxrwxr-x 2 spypiggy spypiggy 4096 Aug 8 08:05 .
drwxrwxr-x 11 spypiggy spypiggy 4096 Aug 6 06:57 ..
-rw-rw-r-- 1 spypiggy spypiggy 10499 Aug 8 08:05 deepstream_test_1.py
-rw-rw-r-- 1 spypiggy spypiggy 3933 Aug 8 07:43 dstest1_pgie_config.txt
-rw-rw-r-- 1 spypiggy spypiggy 2607 Aug 6 06:57 README
```

Configuration

In dstest1_pgie_config.txt, I replaced the relative model paths with absolute paths; the original relative paths are left commented out.

```
[property]
gpu-id=0
net-scale-factor=0.0039215697906911373
model-file=/opt/nvidia/deepstream/deepstream/samples/models/Primary_Detector/resnet10.caffemodel
#model-file=../../../../samples/models/Primary_Detector/resnet10.caffemodel
proto-file=/opt/nvidia/deepstream/deepstream/samples/models/Primary_Detector/resnet10.prototxt
#proto-file=../../../../samples/models/Primary_Detector/resnet10.prototxt
#model-engine-file=/opt/nvidia/deepstream/deepstream/samples/models/Primary_Detector/resnet10.caffemodel_b1_gpu0_int8.engine
#model-engine-file=../../../../samples/models/Primary_Detector/resnet10.caffemodel_b1_gpu0_int8.engine
labelfile-path=/opt/nvidia/deepstream/deepstream/samples/models/Primary_Detector/labels.txt
#labelfile-path=../../../../samples/models/Primary_Detector/labels.txt
int8-calib-file=/opt/nvidia/deepstream/deepstream/samples/models/Primary_Detector/cal_trt.bin
#int8-calib-file=../../../../samples/models/Primary_Detector/cal_trt.bin
```
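Because the file is in INI format, a few lines of Python are enough to check which model paths nvinfer will read, which is handy after editing them. This sketch uses the standard configparser module on an abridged copy of the excerpt above (lines starting with # are treated as comments and skipped). It also confirms that net-scale-factor is 1/255, which scales 8-bit pixel values into the 0 to 1 range. To read the real file, use `parser.read("dstest1_pgie_config.txt")` instead of `read_string()`.

```python
import configparser

# Abridged copy of the [property] section shown above.
CONFIG_TEXT = """\
[property]
gpu-id=0
net-scale-factor=0.0039215697906911373
model-file=/opt/nvidia/deepstream/deepstream/samples/models/Primary_Detector/resnet10.caffemodel
#model-file=../../../../samples/models/Primary_Detector/resnet10.caffemodel
proto-file=/opt/nvidia/deepstream/deepstream/samples/models/Primary_Detector/resnet10.prototxt
labelfile-path=/opt/nvidia/deepstream/deepstream/samples/models/Primary_Detector/labels.txt
int8-calib-file=/opt/nvidia/deepstream/deepstream/samples/models/Primary_Detector/cal_trt.bin
"""

PATH_KEYS = ("model-file", "proto-file", "labelfile-path", "int8-calib-file")


def read_property_section(text):
    """Parse INI text and return its [property] section."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return parser["property"]


props = read_property_section(CONFIG_TEXT)
scale = props.getfloat("net-scale-factor")

if __name__ == "__main__":
    for key in PATH_KEYS:
        print(key, "->", props.get(key))
    # 1/255 maps 8-bit pixel values (0..255) into the 0..1 range.
    print("net-scale-factor * 255 =", scale * 255)
```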

Pipeline

The elements that deserve close attention are:

- nvinfer : The name stands for NVIDIA Inference. This element plays the key role: it feeds each frame to the actual AI model and receives the inference result. nvinfer has a config-file-path property that names its configuration file; in the example, this is dstest1_pgie_config.txt. That file specifies properties such as the name and location of the model and its label file. We already modified this file above.

- nvdsosd : As described above, nvdsosd draws the results of nvinfer on the frame. When a target object is found, it draws a box around the region of interest (ROI) and prints text next to it. What makes this element important is not the drawing itself but the fact that we can attach a probe to it. To use DeepStream the way we want, the screen output matters less than the results produced by nvinfer (object class, location, and confidence). As shown in the picture above, the information nvdsosd is about to draw arrives at its sink pad, so we use the add_probe method to attach a callback function, osd_sink_pad_buffer_probe, to that pad and receive the information in real time.
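The probe walks nested linked lists: batch_meta.frame_meta_list has one node per frame, and each frame's obj_meta_list has one node per detected object, every node exposing .data and .next. The sketch below reproduces only that traversal and the per-class counting with plain Python stand-ins, so the logic can be run without a Jetson. The Node, FrameMeta and ObjectMeta classes are hypothetical substitutes for the pyds types; in the real probe, each .data must first be cast with pyds.NvDsFrameMeta.cast() or pyds.NvDsObjectMeta.cast().

```python
from collections import Counter
from dataclasses import dataclass
from typing import Any, List, Optional

# Class IDs of the resnet10 Primary_Detector used in the example.
PGIE_CLASS_ID_VEHICLE = 0
PGIE_CLASS_ID_BICYCLE = 1
PGIE_CLASS_ID_PERSON = 2
PGIE_CLASS_ID_ROADSIGN = 3


@dataclass
class Node:
    """Hypothetical stand-in for a GList node (.data / .next)."""
    data: Any
    next: Optional["Node"] = None


@dataclass
class ObjectMeta:  # hypothetical stand-in for pyds.NvDsObjectMeta
    class_id: int


@dataclass
class FrameMeta:  # hypothetical stand-in for pyds.NvDsFrameMeta
    frame_num: int
    obj_meta_list: Optional[Node] = None


def make_list(items: List[Any]) -> Optional[Node]:
    """Build a singly linked list from a Python list."""
    head = None
    for item in reversed(items):
        head = Node(item, head)
    return head


def count_objects(frame_meta_list):
    """Return {frame_num: Counter(class_id -> count)} using the probe's loop pattern."""
    result = {}
    l_frame = frame_meta_list
    while l_frame is not None:
        frame_meta = l_frame.data  # real code: pyds.NvDsFrameMeta.cast(l_frame.data)
        counter = Counter()
        l_obj = frame_meta.obj_meta_list
        while l_obj is not None:
            obj_meta = l_obj.data  # real code: pyds.NvDsObjectMeta.cast(l_obj.data)
            counter[obj_meta.class_id] += 1
            l_obj = l_obj.next
        result[frame_meta.frame_num] = counter
        l_frame = l_frame.next
    return result
```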

Test

```python
#!/usr/bin/env python3

################################################################################
# Copyright (c) 2020, NVIDIA CORPORATION. All rights reserved.
#
# Permission is hereby granted, free of charge, to any person obtaining a
# copy of this software and associated documentation files (the "Software"),
# to deal in the Software without restriction, including without limitation
# the rights to use, copy, modify, merge, publish, distribute, sublicense,
# and/or sell copies of the Software, and to permit persons to whom the
# Software is furnished to do so, subject to the following conditions:
#
# The above copyright notice and this permission notice shall be included in
# all copies or substantial portions of the Software.
#
# THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL
# THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING
# FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER
# DEALINGS IN THE SOFTWARE.
################################################################################

import sys, time
sys.path.append('../')
import gi
gi.require_version('Gst', '1.0')
from gi.repository import GObject, Gst
from common.is_aarch_64 import is_aarch64
from common.bus_call import bus_call

import pyds

PGIE_CLASS_ID_VEHICLE = 0
PGIE_CLASS_ID_BICYCLE = 1
PGIE_CLASS_ID_PERSON = 2
PGIE_CLASS_ID_ROADSIGN = 3
start = time.time()


def osd_sink_pad_buffer_probe(pad, info, u_data):
    global start
    frame_number = 0
    # Initializing object counter with 0.
    obj_counter = {
        PGIE_CLASS_ID_VEHICLE: 0,
        PGIE_CLASS_ID_PERSON: 0,
        PGIE_CLASS_ID_BICYCLE: 0,
        PGIE_CLASS_ID_ROADSIGN: 0
    }
    num_rects = 0

    gst_buffer = info.get_buffer()
    if not gst_buffer:
        print("Unable to get GstBuffer ")
        return Gst.PadProbeReturn.OK

    # Retrieve batch metadata from the gst_buffer
    # Note that pyds.gst_buffer_get_nvds_batch_meta() expects the
    # C address of gst_buffer as input, which is obtained with hash(gst_buffer)
    batch_meta = pyds.gst_buffer_get_nvds_batch_meta(hash(gst_buffer))
    l_frame = batch_meta.frame_meta_list
    while l_frame is not None:
        now = time.time()
        try:
            # Note that l_frame.data needs a cast to pyds.NvDsFrameMeta
            # The casting is done by pyds.glist_get_nvds_frame_meta()
            # The casting also keeps ownership of the underlying memory
            # in the C code, so the Python garbage collector will leave
            # it alone.
            #frame_meta = pyds.glist_get_nvds_frame_meta(l_frame.data)
            frame_meta = pyds.NvDsFrameMeta.cast(l_frame.data)
        except StopIteration:
            break

        frame_number = frame_meta.frame_num
        num_rects = frame_meta.num_obj_meta
        l_obj = frame_meta.obj_meta_list
        while l_obj is not None:
            try:
                # Casting l_obj.data to pyds.NvDsObjectMeta
                #obj_meta=pyds.glist_get_nvds_object_meta(l_obj.data)
                obj_meta = pyds.NvDsObjectMeta.cast(l_obj.data)
            except StopIteration:
                break
            obj_counter[obj_meta.class_id] += 1
            obj_meta.rect_params.border_color.set(0.0, 0.0, 1.0, 0.0)
            try:
                l_obj = l_obj.next
            except StopIteration:
                break

        # Acquiring a display meta object. The memory ownership remains in
        # the C code so downstream plugins can still access it. Otherwise
        # the garbage collector will claim it when this probe function exits.
        display_meta = pyds.nvds_acquire_display_meta_from_pool(batch_meta)
        display_meta.num_labels = 1
        py_nvosd_text_params = display_meta.text_params[0]
        # Setting display text to be shown on screen
        # Note that the pyds module allocates a buffer for the string, and the
        # memory will not be claimed by the garbage collector.
        # Reading the display_text field here will return the C address of the
        # allocated string. Use pyds.get_string() to get the string content.
        py_nvosd_text_params.display_text = "Frame Number={} Number of Objects={} Vehicle_count={} Person_count={} FPS={}".format(frame_number, num_rects, obj_counter[PGIE_CLASS_ID_VEHICLE], obj_counter[PGIE_CLASS_ID_PERSON], (1 / (now - start)))

        # Now set the offsets where the string should appear
        py_nvosd_text_params.x_offset = 10
        py_nvosd_text_params.y_offset = 12

        # Font, font-color and font-size
        py_nvosd_text_params.font_params.font_name = "Serif"
        py_nvosd_text_params.font_params.font_size = 10
        # set(red, green, blue, alpha); set to White
        py_nvosd_text_params.font_params.font_color.set(1.0, 1.0, 1.0, 1.0)

        # Text background color
        py_nvosd_text_params.set_bg_clr = 1
        # set(red, green, blue, alpha); set to Black
        py_nvosd_text_params.text_bg_clr.set(0.0, 0.0, 0.0, 1.0)
        # Using pyds.get_string() to get display_text as string
        print(pyds.get_string(py_nvosd_text_params.display_text))
        pyds.nvds_add_display_meta_to_frame(frame_meta, display_meta)
        try:
            l_frame = l_frame.next
        except StopIteration:
            break
        start = now

    return Gst.PadProbeReturn.OK


def main(args):
    # Check input arguments
    if len(args) != 2:
        sys.stderr.write("usage: %s <media file or uri>\n" % args[0])
        sys.exit(1)

    # Standard GStreamer initialization
    GObject.threads_init()
    Gst.init(None)

    # Create gstreamer elements
    # Create Pipeline element that will form a connection of other elements
    print("Creating Pipeline \n ")
    pipeline = Gst.Pipeline()
    if not pipeline:
        sys.stderr.write(" Unable to create Pipeline \n")

    # Source element for reading from the file
    print("Creating Source \n ")
    source = Gst.ElementFactory.make("filesrc", "file-source")
    if not source:
        sys.stderr.write(" Unable to create Source \n")

    # Since the data format in the input file is elementary h264 stream,
    # we need a h264parser
    print("Creating H264Parser \n")
    h264parser = Gst.ElementFactory.make("h264parse", "h264-parser")
    if not h264parser:
        sys.stderr.write(" Unable to create h264 parser \n")

    # Use nvdec_h264 for hardware accelerated decode on GPU
    print("Creating Decoder \n")
    decoder = Gst.ElementFactory.make("nvv4l2decoder", "nvv4l2-decoder")
    if not decoder:
        sys.stderr.write(" Unable to create Nvv4l2 Decoder \n")

    # Create nvstreammux instance to form batches from one or more sources.
    streammux = Gst.ElementFactory.make("nvstreammux", "Stream-muxer")
    if not streammux:
        sys.stderr.write(" Unable to create NvStreamMux \n")

    # Use nvinfer to run inferencing on decoder's output,
    # behaviour of inferencing is set through config file
    pgie = Gst.ElementFactory.make("nvinfer", "primary-inference")
    if not pgie:
        sys.stderr.write(" Unable to create pgie \n")

    # Use convertor to convert from NV12 to RGBA as required by nvosd
    nvvidconv = Gst.ElementFactory.make("nvvideoconvert", "convertor")
    if not nvvidconv:
        sys.stderr.write(" Unable to create nvvidconv \n")

    # Create OSD to draw on the converted RGBA buffer
    nvosd = Gst.ElementFactory.make("nvdsosd", "onscreendisplay")
    if not nvosd:
        sys.stderr.write(" Unable to create nvosd \n")

    # Finally render the osd output
    if is_aarch64():
        transform = Gst.ElementFactory.make("nvegltransform", "nvegl-transform")

    print("Creating EGLSink \n")
    sink = Gst.ElementFactory.make("nveglglessink", "nvvideo-renderer")
    if not sink:
        sys.stderr.write(" Unable to create egl sink \n")

    print("Playing file %s " % args[1])
    source.set_property('location', args[1])
    #streammux.set_property('width', 1920)
    #streammux.set_property('height', 1080)
    streammux.set_property('width', 1024)
    streammux.set_property('height', 768)
    streammux.set_property('batch-size', 1)
    streammux.set_property('batched-push-timeout', 4000000)
    pgie.set_property('config-file-path', "dstest1_pgie_config.txt")
    #pgie.set_property('config-file-path', "../deepstream-test1-usbcam/dstest1_pgie_config.txt")

    print("Adding elements to Pipeline \n")
    pipeline.add(source)
    pipeline.add(h264parser)
    pipeline.add(decoder)
    pipeline.add(streammux)
    pipeline.add(pgie)
    pipeline.add(nvvidconv)
    pipeline.add(nvosd)
    pipeline.add(sink)
    if is_aarch64():
        pipeline.add(transform)

    # we link the elements together
    # file-source -> h264-parser -> nvh264-decoder ->
    # nvinfer -> nvvidconv -> nvosd -> video-renderer
    print("Linking elements in the Pipeline \n")
    source.link(h264parser)
    h264parser.link(decoder)

    sinkpad = streammux.get_request_pad("sink_0")
    if not sinkpad:
        sys.stderr.write(" Unable to get the sink pad of streammux \n")
    srcpad = decoder.get_static_pad("src")
    if not srcpad:
        sys.stderr.write(" Unable to get source pad of decoder \n")
    srcpad.link(sinkpad)
    streammux.link(pgie)
    pgie.link(nvvidconv)
    nvvidconv.link(nvosd)
    if is_aarch64():
        nvosd.link(transform)
        transform.link(sink)
    else:
        nvosd.link(sink)

    # create an event loop and feed gstreamer bus messages to it
    loop = GObject.MainLoop()
    bus = pipeline.get_bus()
    bus.add_signal_watch()
    bus.connect("message", bus_call, loop)

    # Let's add a probe to get informed of the metadata generated. We add the probe to
    # the sink pad of the osd element, since by that time the buffer would have
    # got all the metadata.
    osdsinkpad = nvosd.get_static_pad("sink")
    if not osdsinkpad:
        sys.stderr.write(" Unable to get sink pad of nvosd \n")

    osdsinkpad.add_probe(Gst.PadProbeType.BUFFER, osd_sink_pad_buffer_probe, 0)

    # start play back and listen to events
    print("Starting pipeline \n")
    pipeline.set_state(Gst.State.PLAYING)
    try:
        loop.run()
    except:
        pass
    # cleanup
    pipeline.set_state(Gst.State.NULL)


if __name__ == '__main__':
    sys.exit(main(sys.argv))
```
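The FPS value in the on-screen text is 1 / (now - start) for a single frame interval, so it jumps around from frame to frame. If a steadier number is wanted, one option (my own addition, not part of the NVIDIA sample) is to average over a sliding window of recent frame times, as in this sketch. In the probe, you could create a module-level `fps_meter = FPSMeter()` (a hypothetical name) and use `fps_meter.tick()` in place of `(1 / (now - start))`.

```python
import time
from collections import deque


class FPSMeter:
    """Frames per second averaged over the last `window` frame intervals."""

    def __init__(self, window=30, clock=time.monotonic):
        self.clock = clock
        # `window` intervals need `window + 1` timestamps.
        self.stamps = deque(maxlen=window + 1)

    def tick(self):
        """Record one frame and return the averaged FPS (0.0 until two frames are seen)."""
        self.stamps.append(self.clock())
        if len(self.stamps) < 2:
            return 0.0
        elapsed = self.stamps[-1] - self.stamps[0]
        return (len(self.stamps) - 1) / elapsed if elapsed > 0 else 0.0
```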

The sample videos that ship with DeepStream are in /opt/nvidia/deepstream/deepstream/samples/streams:

```
spypiggy@XavierNX:~$ cd /opt/nvidia/deepstream/deepstream/samples/streams/
spypiggy@XavierNX:/opt/nvidia/deepstream/deepstream/samples/streams$ ls -al
total 278108
drwxr-xr-x 2 root root 4096 Aug 6 08:12 .
drwxr-xr-x 6 root root 4096 Aug 6 08:12 ..
-rw-r--r-- 1 root root 34952625 Jul 27 04:19 sample_1080p_h264.mp4
-rw-r--r-- 1 root root 14018396 Jul 27 04:19 sample_1080p_h265.mp4
-rw-r--r-- 1 root root 14759548 Jul 27 04:19 sample_720p.h264
-rw-r--r-- 1 root root 59724 Jul 27 04:19 sample_720p.jpg
-rw-r--r-- 1 root root 50434312 Jul 27 04:19 sample_720p.mjpeg
-rw-r--r-- 1 root root 15574709 Jul 27 04:19 sample_720p.mp4
-rw-r--r-- 1 root root 24999084 Jul 27 04:19 sample_cam6.mp4
-rw-r--r-- 1 root root 152372 Jul 27 04:19 sample_industrial.jpg
-rw-r--r-- 1 root root 17965863 Jul 27 04:19 sample_qHD.h264
-rw-r--r-- 1 root root 17974199 Jul 27 04:19 sample_qHD.mp4
-rw-r--r-- 1 root root 447675 Jul 27 04:19 yoga.jpg
-rw-r--r-- 1 root root 93399577 Jul 27 04:19 yoga.mp4
```

Activate the virtual environment and run the example with the raw H.264 sample:

```
spypiggy@XavierNX:~/src/deepstream_python_apps/apps/deepstream-test1$ source /home/spypiggy/python/bin/activate
(python) spypiggy@XavierNX:~/src/deepstream_python_apps/apps/deepstream-test1$ python3 deepstream_test_1.py /opt/nvidia/deepstream/deepstream/samples/streams/sample_720p.h264
```

To test with a USB webcam instead, first check that the camera is recognized (here, a Logitech camera) and find its device node:

```
spypiggy@XavierNX:~$ lsusb
Bus 002 Device 002: ID 0bda:0489 Realtek Semiconductor Corp.
Bus 002 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub
Bus 001 Device 003: ID 13d3:3549 IMC Networks
Bus 001 Device 005: ID 046d:0892 Logitech, Inc. OrbiCam
Bus 001 Device 004: ID 1ddd:1151
Bus 001 Device 002: ID 0bda:5489 Realtek Semiconductor Corp.
Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

spypiggy@XavierNX:~$ ls /dev/video*
/dev/video0
```
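If more than one camera is attached, the right device node may not be /dev/video0. A small helper like the following lists the candidates in numeric order so you can pick the one to pass to the script. It is a sketch using only glob, and it does not check whether a node actually supports video capture.

```python
import glob
import os
import re


def sort_video_nodes(paths):
    """Keep only .../videoN entries and sort them numerically (video2 before video10)."""
    def index(path):
        match = re.search(r"video(\d+)$", path)
        return int(match.group(1)) if match else -1
    return sorted((p for p in paths if index(p) >= 0), key=index)


def find_video_devices(dev_dir="/dev"):
    """Return the /dev/videoN nodes present under dev_dir."""
    return sort_video_nodes(glob.glob(os.path.join(dev_dir, "video*")))


if __name__ == "__main__":
    print(find_video_devices())
```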

Then run the USB camera version of the example, passing /dev/video0:

```
spypiggy@XavierNX:~/src/deepstream_python_apps/apps/deepstream-test1$ cd ../deepstream-test1-usbcam
spypiggy@XavierNX:~/src/deepstream_python_apps/apps/deepstream-test1-usbcam$ source /home/spypiggy/python/bin/activate
(python) spypiggy@XavierNX:~/src/deepstream_python_apps/apps/deepstream-test1-usbcam$ python3 deepstream_test_1_usb.py /dev/video0
```
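The USB camera variant differs from the file version only at the front of the pipeline: the camera delivers raw frames in system memory, so they pass through capsfilter, videoconvert and nvvideoconvert to reach NVMM memory before nvstreammux. The sketch below writes that chain as a gst-launch-1.0 description string. It reflects my reading of deepstream_test_1_usb.py rather than a verified copy of it; the 30/1 frame rate and 1280x720 muxer size are illustrative parameters.

```python
def usbcam_description(device="/dev/video0", framerate="30/1",
                       config_file="dstest1_pgie_config.txt",
                       width=1280, height=720, jetson=True):
    """Approximate gst-launch-1.0 form of the USB-camera variant of deepstream-test1."""
    camera = " ! ".join([
        f"v4l2src device={device}",
        f"video/x-raw,framerate={framerate}",  # capsfilter on the raw camera output
        "videoconvert",                        # CPU-side format conversion
        "nvvideoconvert",                      # copy frames into NVMM (GPU) memory
        "video/x-raw(memory:NVMM)",            # capsfilter requiring NVMM buffers
        "m.sink_0",                            # request pad sink_0 on the muxer named m
    ])
    chain = [
        f"nvstreammux name=m batch-size=1 width={width} height={height} "
        "batched-push-timeout=4000000",
        f"nvinfer config-file-path={config_file}",
        "nvvideoconvert",
        "nvdsosd",
    ]
    if jetson:
        chain.append("nvegltransform")  # needed before nveglglessink on Jetson
    chain.append("nveglglessink")
    return camera + " " + " ! ".join(chain)


if __name__ == "__main__":
    print(usbcam_description())
```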

Example 2 - MP4 video file streaming

The first example used a raw H.264 video file (an elementary stream). This example uses the more common MP4 files. H.264 video can be encoded and decoded with hardware acceleration on the Jetson Xavier, which makes it much more efficient than other video formats. However, most video files, such as mp4, avi, and mov, are not raw streams: they are containers that keep information about the video in a header section of the file. To play an mp4 file, you only need to modify part of the pipeline from the first example.
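The difference between the two kinds of files is visible in their first bytes. A raw H.264 (Annex B) elementary stream starts with a start code, 00 00 01 or 00 00 00 01, while an MP4 file normally starts with a box whose type field at bytes 4 to 8 is `ftyp`. The sketch below uses that heuristic to guess whether a file can go straight into filesrc ! h264parse or needs a demuxer (or uridecodebin). It is a rough check, not a full parser, and raw H.265 streams also use Annex B start codes.

```python
def sniff_container(header: bytes) -> str:
    """Guess 'mp4', 'annexb' (raw H.264/H.265 stream) or 'unknown' from leading bytes."""
    # MP4/ISO BMFF: 4-byte box size followed by the box type 'ftyp'.
    if len(header) >= 8 and header[4:8] == b"ftyp":
        return "mp4"
    # Annex B elementary stream: 4-byte or 3-byte start code.
    if header.startswith(b"\x00\x00\x00\x01") or header.startswith(b"\x00\x00\x01"):
        return "annexb"
    return "unknown"


def sniff_file(path: str) -> str:
    """Read the first bytes of a file and classify them."""
    with open(path, "rb") as f:
        return sniff_container(f.read(16))
```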

Pipeline

Instead of the filesrc, h264parse, and nvv4l2decoder elements used in the H.264 example, this example uses uridecodebin. uridecodebin accepts a variety of sources in URI format, including local files, online files, and RTSP streams. Because uridecodebin creates its decoder pads dynamically, the script wraps it in a source bin and, in the pad-added callback (cb_newpad), points the bin's ghost pad at the decoded video pad. The usage of uridecodebin is detailed at https://gstreamer.freedesktop.org/documentation/playback/uridecodebin.html?gi-language=python.
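Since uridecodebin expects a URI rather than a plain path, a local file must be written as file:///path (an absolute path gives exactly three slashes), while strings such as rtsp://... can be passed through unchanged. The helper below is my own convenience sketch, not part of the DeepStream samples: it uses pathlib's `as_uri()` for local paths. Wrapping `args[1]` with it in main() would let the script accept either form.

```python
import os
from pathlib import Path


def to_uri(location: str) -> str:
    """Return `location` as a URI usable by uridecodebin."""
    if "://" in location:
        return location  # already a URI (file://, rtsp://, http://, ...)
    # abspath normalizes the path without resolving symlinks; as_uri() needs an absolute path.
    return Path(os.path.abspath(location)).as_uri()
```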

Configuration

The same dstest1_pgie_config.txt used in Example 1 is reused; no changes are needed.

Test

```python
#!/usr/bin/env python3

################################################################################
# Copyright (c) 2020, NVIDIA CORPORATION. All rights reserved.
#
# Permission is hereby granted, free of charge, to any person obtaining a
# copy of this software and associated documentation files (the "Software"),
# to deal in the Software without restriction, including without limitation
# the rights to use, copy, modify, merge, publish, distribute, sublicense,
# and/or sell copies of the Software, and to permit persons to whom the
# Software is furnished to do so, subject to the following conditions:
#
# The above copyright notice and this permission notice shall be included in
# all copies or substantial portions of the Software.
#
# THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL
# THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING
# FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER
# DEALINGS IN THE SOFTWARE.
################################################################################

import sys, time
sys.path.append('../')
import gi
gi.require_version('Gst', '1.0')
from gi.repository import GObject, Gst
from common.is_aarch_64 import is_aarch64
from common.bus_call import bus_call

import pyds

PGIE_CLASS_ID_VEHICLE = 0
PGIE_CLASS_ID_BICYCLE = 1
PGIE_CLASS_ID_PERSON = 2
PGIE_CLASS_ID_ROADSIGN = 3
start = time.time()
prt = True


def cb_newpad(decodebin, decoder_src_pad, data):
    print("In cb_newpad\n")
    caps = decoder_src_pad.get_current_caps()
    gststruct = caps.get_structure(0)
    gstname = gststruct.get_name()
    source_bin = data
    features = caps.get_features(0)

    # Need to check if the pad created by the decodebin is for video and not
    # audio.
    print("gstname=", gstname)
    if gstname.find("video") != -1:
        # Link the decodebin pad only if decodebin has picked nvidia
        # decoder plugin nvdec_*. We do this by checking if the pad caps contain
        # NVMM memory features.
        print("features=", features)
        if features.contains("memory:NVMM"):
            # Get the source bin ghost pad
            bin_ghost_pad = source_bin.get_static_pad("src")
            if not bin_ghost_pad.set_target(decoder_src_pad):
                sys.stderr.write("Failed to link decoder src pad to source bin ghost pad\n")
        else:
            sys.stderr.write(" Error: Decodebin did not pick nvidia decoder plugin.\n")


def decodebin_child_added(child_proxy, Object, name, user_data):
    print("Decodebin child added:", name, "\n")
    if name.find("decodebin") != -1:
        Object.connect("child-added", decodebin_child_added, user_data)
    if is_aarch64() and name.find("nvv4l2decoder") != -1:
        print("Setting bufapi_version\n")
        Object.set_property("bufapi-version", True)


def osd_sink_pad_buffer_probe(pad, info, u_data):
    global start, prt
    frame_number = 0
    # Initializing object counter with 0.
    obj_counter = {
        PGIE_CLASS_ID_VEHICLE: 0,
        PGIE_CLASS_ID_PERSON: 0,
        PGIE_CLASS_ID_BICYCLE: 0,
        PGIE_CLASS_ID_ROADSIGN: 0
    }
    num_rects = 0

    gst_buffer = info.get_buffer()
    if not gst_buffer:
        print("Unable to get GstBuffer ")
        return Gst.PadProbeReturn.OK

    # Retrieve batch metadata from the gst_buffer
    # Note that pyds.gst_buffer_get_nvds_batch_meta() expects the
    # C address of gst_buffer as input, which is obtained with hash(gst_buffer)
    batch_meta = pyds.gst_buffer_get_nvds_batch_meta(hash(gst_buffer))
    l_frame = batch_meta.frame_meta_list
    while l_frame is not None:
        now = time.time()
        try:
            # Note that l_frame.data needs a cast to pyds.NvDsFrameMeta
            # The casting is done by pyds.glist_get_nvds_frame_meta()
            # The casting also keeps ownership of the underlying memory
            # in the C code, so the Python garbage collector will leave
            # it alone.
            #frame_meta = pyds.glist_get_nvds_frame_meta(l_frame.data)
            frame_meta = pyds.NvDsFrameMeta.cast(l_frame.data)
        except StopIteration:
            break

        frame_number = frame_meta.frame_num
        num_rects = frame_meta.num_obj_meta
        l_obj = frame_meta.obj_meta_list
        while l_obj is not None:
            try:
                # Casting l_obj.data to pyds.NvDsObjectMeta
                #obj_meta=pyds.glist_get_nvds_object_meta(l_obj.data)
                obj_meta = pyds.NvDsObjectMeta.cast(l_obj.data)
                #print('mask_params={}'.format(type(obj_meta.mask_params)))  # not bound in pyds
                #print(' rect_params bg_color alpha={}'.format(type(obj_meta.rect_params.bg_color)))
                #print(' rect_params border_width={}'.format(type(obj_meta.rect_params.border_width)))
                #print(' rect_params border_width={}'.format(obj_meta.rect_params.border_width))
                #print(' rect_params color_id={}'.format(type(obj_meta.rect_params.color_id)))
                #print(' rect_params color_id={}'.format(obj_meta.rect_params.color_id))
                #print(' rect_params has_color_info={}'.format(type(obj_meta.rect_params.has_color_info)))
            except StopIteration:
                break
            obj_meta.rect_params.has_bg_color = 1
            # (red, green, blue, alpha): in my tests both the RGB values and the alpha channel take effect.
            obj_meta.rect_params.bg_color.set(0.0, 0.0, 1.0, 0.2)
            obj_counter[obj_meta.class_id] += 1
            # (red, green, blue, alpha): in my tests the RGB values take effect, but alpha seems to be ignored.
            obj_meta.rect_params.border_color.set(0.0, 1.0, 1.0, 0.0)
            try:
                l_obj = l_obj.next
            except StopIteration:
                break

        # Acquiring a display meta object. The memory ownership remains in
        # the C code so downstream plugins can still access it. Otherwise
        # the garbage collector will claim it when this probe function exits.
        display_meta = pyds.nvds_acquire_display_meta_from_pool(batch_meta)
        display_meta.num_labels = 1
        py_nvosd_text_params = display_meta.text_params[0]
        # Setting display text to be shown on screen
        # Note that the pyds module allocates a buffer for the string, and the
        # memory will not be claimed by the garbage collector.
        # Reading the display_text field here will return the C address of the
        # allocated string. Use pyds.get_string() to get the string content.
        py_nvosd_text_params.display_text = "Frame Number={} Number of Objects={} Vehicle_count={} Person_count={} FPS={}".format(frame_number, num_rects, obj_counter[PGIE_CLASS_ID_VEHICLE], obj_counter[PGIE_CLASS_ID_PERSON], (1 / (now - start)))

        # Now set the offsets where the string should appear
        py_nvosd_text_params.x_offset = 10
        py_nvosd_text_params.y_offset = 12

        # Font, font-color and font-size
        py_nvosd_text_params.font_params.font_name = "/usr/share/fonts/truetype/dejavu/DejaVuSans-Bold.ttf"
        py_nvosd_text_params.font_params.font_size = 20
        # set(red, green, blue, alpha); light blue
        py_nvosd_text_params.font_params.font_color.set(0.2, 0.2, 1.0, 1)

        # Text background color
        py_nvosd_text_params.set_bg_clr = 1
        # set(red, green, blue, alpha); semi-transparent dark gray
        py_nvosd_text_params.text_bg_clr.set(0.2, 0.2, 0.2, 0.3)
        # Using pyds.get_string() to get display_text as string (first frame only)
        if prt:
            print(pyds.get_string(py_nvosd_text_params.display_text))
        pyds.nvds_add_display_meta_to_frame(frame_meta, display_meta)
        try:
            l_frame = l_frame.next
        except StopIteration:
            break
        prt = False
        start = now

    return Gst.PadProbeReturn.OK  # other values: DROP, HANDLED, PASS, REMOVE


def main(args):
    # Check input arguments
    if len(args) != 2:
        sys.stderr.write("usage: %s <media file or uri>\n" % args[0])
        sys.exit(1)

    # Standard GStreamer initialization
    GObject.threads_init()
    Gst.init(None)

    # Create gstreamer elements
    # Create Pipeline element that will form a connection of other elements
    print("Creating Pipeline \n ")
    pipeline = Gst.Pipeline()
    if not pipeline:
        sys.stderr.write(" Unable to create Pipeline \n")

    bin_name = "source-bin"
    source_bin = Gst.Bin.new(bin_name)
    if not source_bin:
        sys.stderr.write(" Unable to create source bin \n")
    uri_decode_bin = Gst.ElementFactory.make("uridecodebin", "uri-decode-bin")
    if not uri_decode_bin:
        sys.stderr.write(" Unable to create uri decode bin \n")
    uri_decode_bin.set_property("uri", args[1])
    uri_decode_bin.connect("pad-added", cb_newpad, source_bin)
    uri_decode_bin.connect("child-added", decodebin_child_added, source_bin)
    Gst.Bin.add(source_bin, uri_decode_bin)
    source_bin.add_pad(Gst.GhostPad.new_no_target("src", Gst.PadDirection.SRC))

    # Create nvstreammux instance to form batches from one or more sources.
    streammux = Gst.ElementFactory.make("nvstreammux", "Stream-muxer")
    if not streammux:
        sys.stderr.write(" Unable to create NvStreamMux \n")
    pipeline.add(streammux)
    pipeline.add(source_bin)
    sinkpad = streammux.get_request_pad("sink_0")
    if not sinkpad:
        sys.stderr.write("Unable to create sink pad bin \n")
    srcpad = source_bin.get_static_pad("src")
    if not srcpad:
        sys.stderr.write("Unable to create src pad bin \n")
    srcpad.link(sinkpad)
    streammux.set_property('width', 1920)
    streammux.set_property('height', 1080)
    streammux.set_property('batch-size', 1)
    streammux.set_property('batched-push-timeout', 4000000)

    # Use nvinfer to run inferencing on decoder's output,
    # behaviour of inferencing is set through config file
    pgie = Gst.ElementFactory.make("nvinfer", "primary-inference")
    if not pgie:
        sys.stderr.write(" Unable to create pgie \n")

    # Use convertor to convert from NV12 to RGBA as required by nvosd
    nvvidconv = Gst.ElementFactory.make("nvvideoconvert", "convertor")
    if not nvvidconv:
        sys.stderr.write(" Unable to create nvvidconv \n")

    # Create OSD to draw on the converted RGBA buffer
    nvosd = Gst.ElementFactory.make("nvdsosd", "onscreendisplay")
    if not nvosd:
        sys.stderr.write(" Unable to create nvosd \n")

    # Finally render the osd output
    if is_aarch64():
        transform = Gst.ElementFactory.make("nvegltransform", "nvegl-transform")

    print("Creating EGLSink \n")
    sink = Gst.ElementFactory.make("nveglglessink", "nvvideo-renderer")
    if not sink:
        sys.stderr.write(" Unable to create egl sink \n")
    sink.set_property("qos", 0)

    pipeline.add(pgie)
    pipeline.add(nvvidconv)
    pipeline.add(nvosd)
    if is_aarch64():
        pipeline.add(transform)
    pipeline.add(sink)

    pgie.set_property('config-file-path', "dstest1_pgie_config.txt")
    number_sources = 1  # this example reads a single input stream
    pgie_batch_size = pgie.get_property("batch-size")
    if pgie_batch_size != number_sources:
        print("WARNING: Overriding infer-config batch-size", pgie_batch_size, " with number of sources ", number_sources, " \n")
        pgie.set_property("batch-size", number_sources)

    print("Linking elements in the Pipeline \n")
    streammux.link(pgie)
    pgie.link(nvvidconv)
    nvvidconv.link(nvosd)
    if is_aarch64():
        nvosd.link(transform)
        transform.link(sink)
    else:
        nvosd.link(sink)

    # create an event loop and feed gstreamer bus messages to it
    loop = GObject.MainLoop()
    bus = pipeline.get_bus()
    bus.add_signal_watch()
    bus.connect("message", bus_call, loop)

    # Let's add a probe to get informed of the metadata generated. We add the probe to
    # the sink pad of the osd element, since by that time the buffer would have
    # got all the metadata.
    osdsinkpad = nvosd.get_static_pad("sink")
    if not osdsinkpad:
        sys.stderr.write(" Unable to get sink pad of nvosd \n")

    osdsinkpad.add_probe(Gst.PadProbeType.BUFFER, osd_sink_pad_buffer_probe, 0)

    # start play back and listen to events
    print("Starting pipeline \n")
    pipeline.set_state(Gst.State.PLAYING)
    try:
        loop.run()
    except:
        pass
    # cleanup
    pipeline.set_state(Gst.State.NULL)


if __name__ == '__main__':
    sys.exit(main(sys.argv))
```

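Save the script as deepstream_test_1_mp4.py in the deepstream-test1 directory (so it can use the same config file), then pass the video as a file:// URI:

```
spypiggy@XavierNX:~/src/deepstream_python_apps/apps/deepstream-test1$ source /home/spypiggy/python/bin/activate
(python) spypiggy@XavierNX:~/src/deepstream_python_apps/apps/deepstream-test1$ python3 deepstream_test_1_mp4.py file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_720p.mp4
```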

Example 3 - Face Detect-IR Model

DeepStream provides a pretrained face detection model, FaceDetect-IR. If DeepStream is installed in /opt/nvidia/deepstream/deepstream-5.0, its configuration files are in /opt/nvidia/deepstream/deepstream-5.0/samples/configs/tlt_pretrained_models. Copy config_infer_primary_facedetectir.txt from there, correct the locations of the model, label file, and so on, and then change the configuration file name in deepstream_test_1.py.
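Once the config file is copied to another directory, its relative paths no longer point at the model files. A minimal sketch for turning them into absolute paths follows. It treats any key ending in -file, -path or -model as a path (an assumption; check the key names in your copy of config_infer_primary_facedetectir.txt), leaves comments, section headers and absolute paths alone, and returns the new text without writing anything to disk. The keys and file names in the usage test are hypothetical.

```python
import os

PATH_SUFFIXES = ("-file", "-path", "-model")  # assumed naming pattern for path keys


def absolutize_paths(config_text: str, base_dir: str) -> str:
    """Rewrite relative path values in an nvinfer-style key=value config file."""
    out = []
    for line in config_text.splitlines():
        stripped = line.strip()
        if stripped and not stripped.startswith(("#", "[")) and "=" in stripped:
            key, value = (part.strip() for part in stripped.split("=", 1))
            if key.endswith(PATH_SUFFIXES) and value and not os.path.isabs(value):
                # Resolve the path relative to the directory of the original config.
                line = f"{key}={os.path.normpath(os.path.join(base_dir, value))}"
        out.append(line)
    return "\n".join(out) + "\n"
```

Pass the directory the file was copied from as base_dir, for example /opt/nvidia/deepstream/deepstream-5.0/samples/configs/tlt_pretrained_models.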

https://youtu.be/XkX2x6EhCfg

Hello. This is a great write-up of Jetson & DeepStream. Are you doing this for fun or is this for work?

Would love to connect and chat more!

Hi jaepaik.

I'm doing Jetson-related work for fun.

I'm working in the VoIP (telephony) area.

If you contact me by e-mail, I'll reply.

Thanks a lot.

PS: If you are Korean, feel free to email me in Korean.

Best Regards.

Great article! Is it possible that you switched src and sink in your drawings?

Thanks. I fixed the image.