I do not recommend applying YOLOv4 to DeepStream 5.0. The following article is an experimental test of YOLOv4. The YOLO versions officially supported by DeepStream 5.0 are v2 and v3. Using YOLOv3 is the easiest.

So far, I have mainly used the primary detector based on the resnet10 Caffe model. This model recognizes four classes: people, cars, bicycles, and road signs. YOLOv4 claims state-of-the-art accuracy while maintaining a high processing frame rate. It achieves 43.5% AP (65.7% AP₅₀) on the MS COCO dataset at approximately 65 FPS on a Tesla V100. You can find more information in the YOLOv4 GitHub repository.

<YOLOv4 Performance>

I described the installation and implementation of YOLOv4 on the Jetson Nano in previous posts. Those instructions apply to the Xavier NX without change.

- Jetson Nano - YoloV4 Installation

- Jetson Nano - YoloV4 Python implementation

- Jetson Nano - YoloV4 Object Tracking

Prerequisites

Several earlier posts explain how to implement DeepStream using Python on the Xavier NX. Since DeepStream runs on every Jetson model that uses JetPack, the contents described here also apply to the Jetson Nano, TX2, and Xavier. This time, we will cover what is needed to apply the DeepStream setup installed and tested earlier to your own application. Please be sure to read the preceding articles first.

- Jetson Xavier NX - JetPack 4.4(production release) headless setup

- Jetson Xavier NX - Python virtual environment and ML platforms(tensorflow, Pytorch) installation

- Xavier NX-DeepStream 5.0 #1 - Installation

- Xavier NX-DeepStream 5.0 #2 - Run Python Samples(test1)

- Xavier NX-DeepStream 5.0 #3 - Run Python Samples (test2)

- Xavier NX-DeepStream 5.0 #4 - Handling Primary, Secondary Inference Results

DeepStream 5.0 officially supports YOLO only up to YOLOv3. Therefore, supporting YOLOv4 in DeepStream 5.0 requires some work.

To create the TensorRT model required by DeepStream, the following steps are required.

- Convert the Yolov4 Darknet model to standard ONNX.

- Convert ONNX model to TensorRT model.

Create YOLOV4 TensorRT Model

First, download YOLOv4 from Tianxiaomo's GitHub repository, which is implemented as a PyTorch model and provides conversion scripts.

    # First activate the Python virtual environment
    spypiggy@XavierNX:~$ source /home/spypiggy/python/bin/activate
    (python) spypiggy@XavierNX:~$ cd src
    # Download the PyTorch version of YOLOv4 to convert to the ONNX and TensorRT models
    (python) spypiggy@XavierNX:~/src$ git clone https://github.com/Tianxiaomo/pytorch-YOLOv4.git
    (python) spypiggy@XavierNX:~/src$ cd pytorch-YOLOv4
    (python) spypiggy@XavierNX:~/src/pytorch-YOLOv4$ pip3 install onnxruntime
    (python) spypiggy@XavierNX:~/src/pytorch-YOLOv4$ wget https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v3_optimal/yolov4.weights

Convert DarkNet Model to ONNX Model

Use demo_darknet2onnx.py in the pytorch-YOLOv4 directory to convert the Darknet model to an ONNX model. This command creates a new yolov4_1_3_608_608_static.onnx file.

(python) spypiggy@XavierNX:~/src/pytorch-YOLOv4$ python3 demo_darknet2onnx.py ./cfg/yolov4.cfg yolov4.weights ./data/giraffe.jpg 1
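The output file name appears to encode the batch size, channel count, height, and width of the network input. The following is a minimal sketch, assuming the `[net]` section of the Darknet cfg carries `width` and `height` keys and that the naming pattern is the one seen in the file names in this post. The function names `read_net_size` and `expected_onnx_name` are hypothetical helpers, not part of pytorch-YOLOv4.

```python
def read_net_size(cfg_text):
    """Return (width, height) from the [net] section of a Darknet cfg."""
    section = None
    size = {}
    for raw in cfg_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip()
            continue
        if section == "net" and "=" in line:
            key, value = (part.strip() for part in line.split("=", 1))
            if key in ("width", "height"):
                size[key] = int(value)
    return size["width"], size["height"]


def expected_onnx_name(batch_size, width, height):
    # Assumed pattern: yolov4_<batch>_3_<height>_<width>_static.onnx
    return "yolov4_{}_3_{}_{}_static.onnx".format(batch_size, height, width)


# Shortened stand-in for a real cfg file, for illustration only.
SAMPLE_CFG = """
[net]
batch=64
width=608
height=608
channels=3

[convolutional]
filters=32
"""

width, height = read_net_size(SAMPLE_CFG)
print(expected_onnx_name(1, width, height))
```

Darknet cfg files repeat section names such as `[convolutional]`, so the sketch scans lines instead of relying on a strict INI parser.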

Convert ONNX Model to TensorRT Model

If the yolov4_1_3_608_608_static.onnx file is created properly, the next step is to convert this model to a TensorRT model. This process takes a long time, so you can take a coffee break.

(python) spypiggy@XavierNX:~/src/pytorch-YOLOv4$ /usr/src/tensorrt/bin/trtexec --onnx=yolov4_1_3_608_608_static.onnx --explicitBatch --saveEngine=yolov4_1_3_608_608_fp16.engine --workspace=4096 --fp16

    (python) spypiggy@XavierNX:~/src/pytorch-YOLOv4$ ls -al
    total 639520
    drwxrwxr-x  7 spypiggy spypiggy      4096 Aug 16 22:49 .
    drwxr-xr-x 13 spypiggy spypiggy      4096 Aug 16 10:44 ..
    drwxrwxr-x  2 spypiggy spypiggy      4096 Aug 16 21:50 cfg
    -rw-rw-r--  1 spypiggy spypiggy      1573 Aug 16 10:44 cfg.py
    drwxrwxr-x  2 spypiggy spypiggy      4096 Aug 16 10:44 data
    -rw-rw-r--  1 spypiggy spypiggy     16988 Aug 16 10:44 dataset.py
    drwxrwxr-x  3 spypiggy spypiggy      4096 Aug 16 11:42 DeepStream
    -rw-rw-r--  1 spypiggy spypiggy      2223 Aug 16 10:44 demo_darknet2onnx.py
    -rw-rw-r--  1 spypiggy spypiggy      4588 Aug 16 10:44 demo.py
    -rw-rw-r--  1 spypiggy spypiggy      3456 Aug 16 10:44 demo_pytorch2onnx.py
    -rw-rw-r--  1 spypiggy spypiggy      2974 Aug 16 10:44 demo_tensorflow.py
    -rw-rw-r--  1 spypiggy spypiggy      7191 Aug 16 10:44 demo_trt.py
    -rw-rw-r--  1 spypiggy spypiggy     11814 Aug 16 10:44 evaluate_on_coco.py
    drwxrwxr-x  8 spypiggy spypiggy      4096 Aug 16 10:44 .git
    -rw-rw-r--  1 spypiggy spypiggy       131 Aug 16 10:44 .gitignore
    -rw-rw-r--  1 spypiggy spypiggy     11560 Aug 16 10:44 License.txt
    -rw-rw-r--  1 spypiggy spypiggy     17061 Aug 16 10:44 models.py
    -rw-rw-r--  1 spypiggy spypiggy    237646 Aug 16 10:57 predictions_onnx.jpg
    -rw-rw-r--  1 spypiggy spypiggy     10566 Aug 16 10:44 README.md
    -rw-rw-r--  1 spypiggy spypiggy       158 Aug 16 10:44 requirements.txt
    drwxrwxr-x  4 spypiggy spypiggy      4096 Aug 16 10:55 tool
    -rw-rw-r--  1 spypiggy spypiggy     28042 Aug 16 10:44 train.py
    -rw-rw-r--  1 spypiggy spypiggy      3111 Aug 16 10:44 Use_yolov4_to_train_your_own_data.md
    -rw-rw-r--  1 spypiggy spypiggy 138693567 Aug 16 12:58 yolov4_1_3_608_608_fp16.engine
    -rw-rw-r--  1 spypiggy spypiggy 258030612 Aug 16 10:57 yolov4_1_3_608_608_static.onnx
    -rw-rw-r--  1 spypiggy spypiggy 257717640 Apr 27 08:35 yolov4.weights

If no errors have occurred, you can check the newly created yolov4_1_3_608_608_fp16.engine and yolov4_1_3_608_608_static.onnx files. I will use yolov4_1_3_608_608_fp16.engine, the model built for TensorRT.
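If you convert several models, it can help to assemble the trtexec command line in one place. The following is a minimal sketch that only builds the argument list from the flags used in this post. The helper name `build_trtexec_command` and the `_fp32` suffix for a non-FP16 engine are my own assumptions for illustration.

```python
import shlex


def build_trtexec_command(onnx_path, workspace_mb=4096, fp16=True,
                          trtexec="/usr/src/tensorrt/bin/trtexec"):
    """Build the trtexec argument list and the engine file name."""
    suffix = "_static.onnx"
    if not onnx_path.endswith(suffix):
        raise ValueError("expected a file name ending in " + suffix)
    precision = "fp16" if fp16 else "fp32"  # "fp32" naming is an assumption
    engine_path = onnx_path[:-len(suffix)] + "_" + precision + ".engine"
    cmd = [
        trtexec,
        "--onnx=" + onnx_path,
        "--explicitBatch",
        "--saveEngine=" + engine_path,
        "--workspace=" + str(workspace_mb),
    ]
    if fp16:
        cmd.append("--fp16")
    return cmd, engine_path


cmd, engine_path = build_trtexec_command("yolov4_1_3_608_608_static.onnx")
print(" ".join(shlex.quote(part) for part in cmd))
# To actually run the conversion on the Jetson:
#   import subprocess; subprocess.run(cmd, check=True)
```

The sketch deliberately stops short of running the command, since the conversion only makes sense on a machine with TensorRT installed.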

Rebuild objectDetector_Yolo

DeepStream provides plug-ins that can use YOLOv2 and YOLOv3. To use YOLOv4, this code must be modified and rebuilt. The source code is located in /opt/nvidia/deepstream/deepstream/sources/objectDetector_Yolo. The previously downloaded https://github.com/Tianxiaomo/pytorch-YOLOv4 repository also provides source code modified so that YOLOv4 can be used in DeepStream 5.0. Either works, but I will modify the source code in the directory where DeepStream 5.0 was installed. The two code bases are essentially the same, and I used most of Tianxiaomo's code, with only a few changes to keep the coding style consistent with the existing file.

- If you want to use the original source code, modify /opt/nvidia/deepstream/deepstream/sources/objectDetector_Yolo/nvdsinfer_custom_impl_Yolo/nvdsparsebbox_Yolo.cpp.

- If you want to use Tianxiaomo's GitHub code, build it without changes in the /home/spypiggy/src/pytorch-YOLOv4/DeepStream/nvdsinfer_custom_impl_Yolo/ directory.

You can download the source code(nvdsparsebbox_Yolo.cpp) at https://github.com/raspberry-pi-maker/NVIDIA-Jetson/tree/master/DeepStream 5.0.

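The Makefile used for the build requires the CUDA_VER environment variable. Because we are using JetPack 4.4, the CUDA version is 10.2.

Therefore, export the environment variable CUDA_VER before building as follows. If sudo on your system does not pass the exported variable through to make, setting it on the command line (sudo CUDA_VER=10.2 make) is an alternative.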

    spypiggy@XavierNX:~$ cd /opt/nvidia/deepstream/deepstream/sources/objectDetector_Yolo/nvdsinfer_custom_impl_Yolo
    spypiggy@XavierNX:/opt/nvidia/deepstream/deepstream/sources/objectDetector_Yolo/nvdsinfer_custom_impl_Yolo$ export CUDA_VER=10.2
    # Before building, copy nvdsparsebbox_Yolo.cpp from https://github.com/raspberry-pi-maker/NVIDIA-Jetson/tree/master/DeepStream 5.0
    spypiggy@XavierNX:/opt/nvidia/deepstream/deepstream/sources/objectDetector_Yolo/nvdsinfer_custom_impl_Yolo$ sudo make clean
    spypiggy@XavierNX:/opt/nvidia/deepstream/deepstream/sources/objectDetector_Yolo/nvdsinfer_custom_impl_Yolo$ sudo make
    spypiggy@XavierNX:/opt/nvidia/deepstream/deepstream/sources/objectDetector_Yolo/nvdsinfer_custom_impl_Yolo$ ls -al
    total 1912
    drwxr-xr-x 2 root root   4096 Aug 16 21:21 .
    drwxr-xr-x 3 root root   4096 Aug  6 08:12 ..
    -rw-r--r-- 1 root root   3373 Jul 27 04:19 kernels.cu
    -rw-r--r-- 1 root root  16760 Aug 16 11:16 kernels.o
    -rwxr-xr-x 1 root root 786176 Aug 16 21:17 libnvdsinfer_custom_impl_Yolo.so
    -rw-r--r-- 1 root root   2319 Jul 27 04:19 Makefile
    -rw-r--r-- 1 root root   4101 Jul 27 04:19 nvdsinfer_yolo_engine.cpp
    -rw-r--r-- 1 root root  14600 Aug 16 11:16 nvdsinfer_yolo_engine.o
    -rw-r--r-- 1 root root  20867 Aug 16 21:16 nvdsparsebbox_Yolo.cpp
    -rw-r--r-- 1 root root 270344 Aug 16 21:17 nvdsparsebbox_Yolo.o
    -rw-r--r-- 1 root root  16571 Jul 27 04:19 trt_utils.cpp
    -rw-r--r-- 1 root root   3449 Jul 27 04:19 trt_utils.h
    -rw-r--r-- 1 root root 208176 Aug 16 11:16 trt_utils.o
    -rw-r--r-- 1 root root  20099 Jul 27 04:19 yolo.cpp
    -rw-r--r-- 1 root root   3242 Jul 27 04:19 yolo.h
    -rw-r--r-- 1 root root 498632 Aug 16 11:16 yolo.o
    -rw-r--r-- 1 root root   3961 Jul 27 04:19 yoloPlugins.cpp
    -rw-r--r-- 1 root root   5345 Jul 27 04:19 yoloPlugins.h
    -rw-r--r-- 1 root root  38024 Aug 16 11:16 yoloPlugins.o

If the libnvdsinfer_custom_impl_Yolo.so file is newly created, the build succeeded. This file plays a key role later in the DeepStream 5.0 pipeline.

Configuration Files

You can find two configuration files in the /home/spypiggy/src/pytorch-YOLOv4/DeepStream directory. The path of the model file must be modified in these files.

    spypiggy@XavierNX:~/src/pytorch-YOLOv4/DeepStream$ pwd
    /home/spypiggy/src/pytorch-YOLOv4/DeepStream
    spypiggy@XavierNX:~/src/pytorch-YOLOv4/DeepStream$ ls -al
    total 28
    drwxrwxr-x 3 spypiggy spypiggy 4096 Aug 16 11:42 .
    drwxrwxr-x 7 spypiggy spypiggy 4096 Aug 16 22:49 ..
    -rw-rw-r-- 1 spypiggy spypiggy 3680 Aug 16 21:25 config_infer_primary_yoloV4.txt
    -rw-rw-r-- 1 spypiggy spypiggy 4095 Aug 16 21:48 deepstream_app_config_yoloV4.txt
    -rw-rw-r-- 1 spypiggy spypiggy  621 Aug 16 10:44 labels.txt
    drwxrwxr-x 2 spypiggy spypiggy 4096 Aug 16 22:50 nvdsinfer_custom_impl_Yolo
    -rw-rw-r-- 1 spypiggy spypiggy  504 Aug 16 10:44 Readme.md

The paths in the following configuration files are set to suit my environment. If your installation path is different, please correct them accordingly. I used sink0 and sink1 in the deepstream_app_config_yoloV4.txt file. sink0 is for screen output, and sink1 is for file output.

    [property]
    gpu-id=0
    net-scale-factor=0.0039215697906911373
    #0=RGB, 1=BGR
    model-color-format=0
    #custom-network-config=/home/spypiggy/src/pytorch-YOLOv4/cfg/yolov4.cfg
    # model-file=yolov3-tiny.weights
    model-engine-file=/home/spypiggy/src/pytorch-YOLOv4/yolov4_1_3_608_608_fp16.engine
    labelfile-path=/opt/nvidia/deepstream/deepstream/sources/objectDetector_Yolo/labels.txt
    ## 0=FP32, 1=INT8, 2=FP16 mode
    network-mode=2
    num-detected-classes=80
    gie-unique-id=1
    network-type=0
    #is-classifier=0
    ## 0=Group Rectangles, 1=DBSCAN, 2=NMS, 3= DBSCAN+NMS Hybrid, 4 = None(No clustering)
    cluster-mode=4
    maintain-aspect-ratio=1
    parse-bbox-func-name=NvDsInferParseCustomYoloV4
    custom-lib-path=/opt/nvidia/deepstream/deepstream-5.0/sources/objectDetector_Yolo/nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so
    engine-create-func-name=NvDsInferYoloCudaEngineGet
    #scaling-filter=0
    #scaling-compute-hw=0

    [class-attrs-all]
    nms-iou-threshold=0.6
    pre-cluster-threshold=0.4

<config_infer_primary_yoloV4.txt>
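The `net-scale-factor` value in this file is approximately 1/255, which maps 8-bit pixel values to roughly the range 0 to 1. The Gst-nvinfer documentation describes the preprocessing as y = net-scale-factor * (x - mean). The following sketch only illustrates that arithmetic, assuming a mean of zero because no offsets are set in this configuration. `normalize` is a hypothetical helper, not a DeepStream function.

```python
NET_SCALE_FACTOR = 0.0039215697906911373  # value from the config above


def normalize(pixel, scale=NET_SCALE_FACTOR, mean=0.0):
    """Apply y = scale * (x - mean) to one pixel value."""
    return scale * (pixel - mean)


# The configured factor is close to, but not exactly, 1/255.
print(abs(NET_SCALE_FACTOR - 1.0 / 255.0) < 1e-8)
print(normalize(0), normalize(255))
```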

    [application]
    enable-perf-measurement=1
    perf-measurement-interval-sec=5
    #gie-kitti-output-dir=streamscl

    [tiled-display]
    enable=0
    rows=1
    columns=1
    width=1280
    height=720
    gpu-id=0
    #(0): nvbuf-mem-default - Default memory allocated, specific to particular platform
    #(1): nvbuf-mem-cuda-pinned - Allocate Pinned/Host cuda memory, applicable for Tesla
    #(2): nvbuf-mem-cuda-device - Allocate Device cuda memory, applicable for Tesla
    #(3): nvbuf-mem-cuda-unified - Allocate Unified cuda memory, applicable for Tesla
    #(4): nvbuf-mem-surface-array - Allocate Surface Array memory, applicable for Jetson
    nvbuf-memory-type=0

    [source0]
    enable=1
    #Type - 1=CameraV4L2 2=URI 3=MultiURI
    type=3
    uri=file:/opt/nvidia/deepstream/deepstream-5.0/samples/streams/sample_1080p_h264.mp4
    #uri=file:/opt/nvidia/deepstream/deepstream-5.0/samples/streams/sample_720p.h264
    num-sources=1
    gpu-id=0
    # (0): memtype_device - Memory type Device
    # (1): memtype_pinned - Memory type Host Pinned
    # (2): memtype_unified - Memory type Unified
    cudadec-memtype=0

    #For Screen Output
    [sink0]
    enable=1
    #Type - 1=FakeSink 2=EglSink 3=File
    type=2
    sync=0
    source-id=0
    gpu-id=0
    nvbuf-memory-type=0

    #For File Output
    [sink1]
    enable=1
    #Type - 1=FakeSink 2=EglSink 3=File
    type=3
    sync=0
    source-id=0
    gpu-id=0
    nvbuf-memory-type=0
    #1=mp4 2=mkv
    container=1
    #1=h264 2=h265
    codec=1
    output-file=yolov4.mp4

    [osd]
    enable=1
    gpu-id=0
    border-width=1
    text-size=12
    text-color=1;1;1;1;
    text-bg-color=0.3;0.3;0.3;1
    font=Serif
    show-clock=0
    clock-x-offset=800
    clock-y-offset=820
    clock-text-size=12
    clock-color=1;0;0;0
    nvbuf-memory-type=0

    [streammux]
    gpu-id=0
    ##Boolean property to inform muxer that sources are live
    live-source=0
    batch-size=1
    ##time out in usec, to wait after the first buffer is available
    ##to push the batch even if the complete batch is not formed
    batched-push-timeout=40000
    ## Set muxer output width and height
    width=1280
    height=720
    ##Enable to maintain aspect ratio wrt source, and allow black borders, works
    ##along with width, height properties
    enable-padding=0
    nvbuf-memory-type=0

    # config-file property is mandatory for any gie section.
    # Other properties are optional and if set will override the properties set in
    # the infer config file.
    [primary-gie]
    enable=1
    gpu-id=0
    model-engine-file=/home/spypiggy/src/pytorch-YOLOv4/yolov4_1_3_608_608_fp16.engine
    labelfile-path=labels.txt
    #batch-size=1
    #Required by the app for OSD, not a plugin property
    bbox-border-color0=1;0;0;1
    bbox-border-color1=0;1;1;1
    bbox-border-color2=0;0;1;1
    bbox-border-color3=0;1;0;1
    interval=0
    gie-unique-id=1
    nvbuf-memory-type=0
    config-file=/home/spypiggy/src/pytorch-YOLOv4/DeepStream/config_infer_primary_yoloV4.txt

    [tracker]
    enable=0
    tracker-width=512
    tracker-height=320
    ll-lib-file=/opt/nvidia/deepstream/deepstream-5.0/lib/libnvds_mot_klt.so

    [tests]
    file-loop=0

<deepstream_app_config_yoloV4.txt>
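Since the model and library paths in both files must match your own installation, a small script can rewrite a single key without touching the comments. The following is a minimal sketch, assuming the simple `key=value` layout shown in these files. `set_config_value` is a hypothetical helper and the replacement path is a placeholder.

```python
def set_config_value(text, section, key, value):
    """Return config text with `key` in `[section]` set to `value`."""
    out = []
    current = None
    replaced = False
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("[") and stripped.endswith("]"):
            current = stripped[1:-1]
        elif (current == section
              and not stripped.startswith("#")   # leave comments untouched
              and "=" in stripped
              and stripped.split("=", 1)[0].strip() == key):
            line = "{}={}".format(key, value)
            replaced = True
        out.append(line)
    if not replaced:
        raise KeyError("{} not found in [{}]".format(key, section))
    return "\n".join(out) + "\n"


# Shortened stand-in for config_infer_primary_yoloV4.txt.
SAMPLE = """[property]
gpu-id=0
# model-file=yolov3-tiny.weights
model-engine-file=/home/spypiggy/src/pytorch-YOLOv4/yolov4_1_3_608_608_fp16.engine

[class-attrs-all]
pre-cluster-threshold=0.4
"""

updated = set_config_value(
    SAMPLE, "property", "model-engine-file",
    "/path/to/your/yolov4_1_3_608_608_fp16.engine")  # placeholder path
print(updated)
```

A line-based rewrite is used here instead of Python's configparser because writing a file back with configparser would drop the explanatory comments.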

Test YOLOV4 in DeepStream

All the preparations for testing YOLOv4 in DeepStream are complete. You can now check that it works properly with the deepstream-app command.

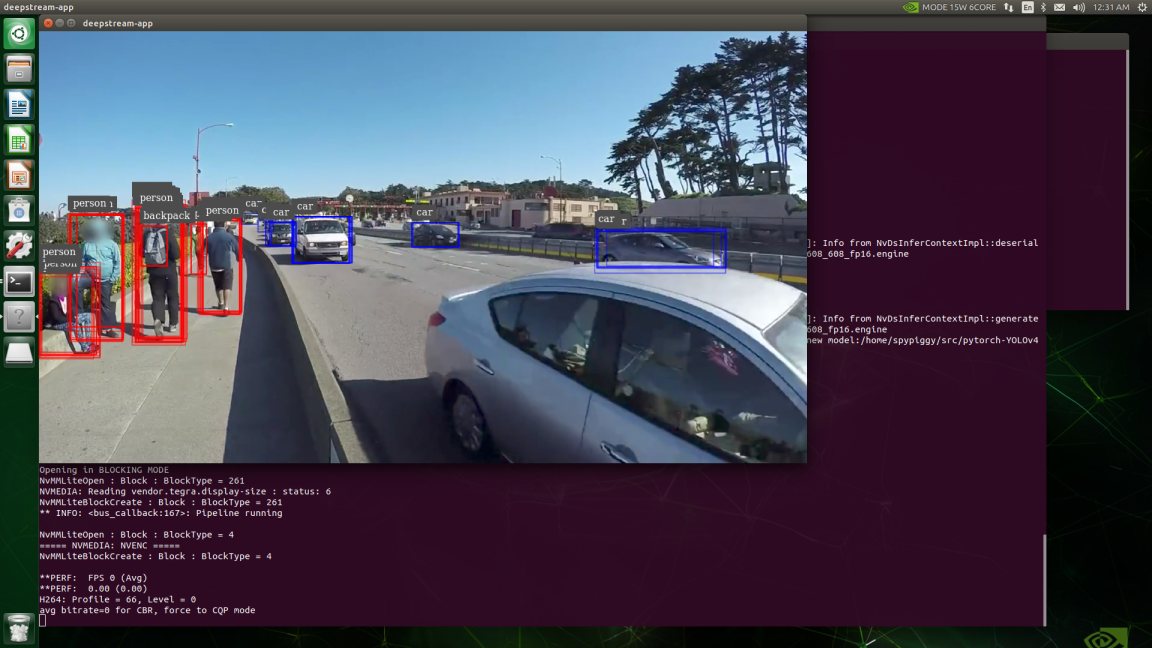

Run YOLOv4 in DeepStream 5.0.

    spypiggy@XavierNX:~/src/pytorch-YOLOv4$ deepstream-app -c ./DeepStream/deepstream_app_config_yoloV4.txt

<result screen>

You can see that it works properly. When the program ends, the yolov4.mp4 file has also been created.

Tip: In the picture above, some objects are detected more than once. This can be reduced by raising the threshold values in the configuration file. The right values should be found by testing, but a value between 0.6 and 0.9 is usually a good starting point.

    [class-attrs-all]
    nms-iou-threshold=0.7
    pre-cluster-threshold=0.7
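To get a feel for what the two thresholds control, the following sketch implements a generic confidence cut followed by greedy non-maximum suppression (NMS). It is a textbook illustration only, not DeepStream's internal code, and I have not verified that DeepStream applies both values in exactly this way with the cluster-mode used here. The boxes and scores are made up.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (left, top, width, height)."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets, conf_threshold, iou_threshold):
    """dets: list of (score, box). Confidence cut, then greedy NMS."""
    candidates = sorted((d for d in dets if d[0] >= conf_threshold),
                        key=lambda d: d[0], reverse=True)
    kept = []
    for score, box in candidates:
        # Keep a box only if it does not overlap a stronger kept box too much.
        if all(iou(box, k[1]) <= iou_threshold for k in kept):
            kept.append((score, box))
    return kept


# Made-up detections for illustration.
dets = [
    (0.90, (100, 100, 50, 80)),
    (0.75, (104, 102, 50, 80)),  # near-duplicate of the first box
    (0.45, (300, 120, 40, 60)),  # weak, separate detection
]
print(len(filter_detections(dets, 0.4, 0.6)))
print(len(filter_detections(dets, 0.7, 0.6)))
```

In this generic form, raising the confidence threshold removes weak boxes, while a box is suppressed when its IoU with a stronger box exceeds the IoU threshold, so the two values pull in different directions and are worth tuning separately.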

Applying YOLOV4 tiny model in DeepStream

The tiny model follows the same sequence as the full YOLOv4 model: convert the Darknet model to an ONNX model, then convert the ONNX model to a TensorRT model.

(python) spypiggy@XavierNX:~/src/pytorch-YOLOv4$ wget https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4-tiny.weights

(python) spypiggy@XavierNX:~/src/pytorch-YOLOv4$ python3 demo_darknet2onnx.py ./cfg/yolov4-tiny.cfg yolov4-tiny.weights ./data/giraffe.jpg 1

The above command will probably generate the file yolov4_1_3_416_416_static.onnx. Now convert yolov4_1_3_416_416_static.onnx file to TensorRT model.

(python) spypiggy@XavierNX:~/src/pytorch-YOLOv4$ /usr/src/tensorrt/bin/trtexec --onnx=yolov4_1_3_416_416_static.onnx --explicitBatch --saveEngine=yolov4_1_3_416_416_fp16.engine --workspace=4096 --fp16

The above command will probably generate the file yolov4_1_3_416_416_fp16.engine.

Then create a configuration file for the tiny model. Python programs build their own pipelines, so they need only this inference configuration file and not the previously used deepstream_app_config_yoloV4.txt file.

    [property]
    gpu-id=0
    net-scale-factor=0.0039215697906911373
    #0=RGB, 1=BGR
    model-color-format=0
    #custom-network-config=/home/spypiggy/src/pytorch-YOLOv4/cfg/yolov4-tiny.cfg
    # model-file=yolov3-tiny.weights
    model-engine-file=/home/spypiggy/src/pytorch-YOLOv4/yolov4_1_3_416_416_fp16.engine
    labelfile-path=/opt/nvidia/deepstream/deepstream/sources/objectDetector_Yolo/labels.txt
    ## 0=FP32, 1=INT8, 2=FP16 mode
    network-mode=2
    num-detected-classes=80
    gie-unique-id=1
    network-type=0
    #is-classifier=0
    ## 0=Group Rectangles, 1=DBSCAN, 2=NMS, 3= DBSCAN+NMS Hybrid, 4 = None(No clustering)
    cluster-mode=4
    maintain-aspect-ratio=1
    parse-bbox-func-name=NvDsInferParseCustomYoloV4
    custom-lib-path=/opt/nvidia/deepstream/deepstream-5.0/sources/objectDetector_Yolo/nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so
    engine-create-func-name=NvDsInferYoloCudaEngineGet
    #scaling-filter=0
    #scaling-compute-hw=0

    [class-attrs-all]
    nms-iou-threshold=0.6
    pre-cluster-threshold=0.4

<config_infer_primary_yoloV4_tiny.txt>

Run YOLOv4-tiny in DeepStream 5.0.

    spypiggy@XavierNX:~/src/pytorch-YOLOv4$ deepstream-app -c ./DeepStream/deepstream_app_config_yoloV4_tiny.txt

You will notice that processing is much faster than with YOLOv4. In exchange, the accuracy is slightly lower than that of YOLOv4.

DeepStream YOLOv4 Python implementation

Earlier I tested YOLOv4 using the 'deepstream-app' program provided by DeepStream. But my ultimate goal is to use YOLOv4 in a Python program. As in previous examples, the goal is to handle the detection results in a probe function.

In the previous blog Xavier NX-DeepStream 5.0 #1-Installation, if you look at the directory of deepstream_python_apps that we installed, there is deepstream_python_apps/apps/common. You need to copy this directory next to your script, or add its parent directory deepstream_python_apps/apps to the module search path in your Python code using the sys.path.append() function, so that the common package can be imported. The example introduced below is a modified version of /home/spypiggy/src/deepstream_python_apps/apps/deepstream-test1/deepstream_test_1.py.

    #!/usr/bin/env python3

    ################################################################################
    # Copyright (c) 2020, NVIDIA CORPORATION. All rights reserved.
    #
    # Permission is hereby granted, free of charge, to any person obtaining a
    # copy of this software and associated documentation files (the "Software"),
    # to deal in the Software without restriction, including without limitation
    # the rights to use, copy, modify, merge, publish, distribute, sublicense,
    # and/or sell copies of the Software, and to permit persons to whom the
    # Software is furnished to do so, subject to the following conditions:
    #
    # The above copyright notice and this permission notice shall be included in
    # all copies or substantial portions of the Software.
    #
    # THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
    # IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
    # FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL
    # THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
    # LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING
    # FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER
    # DEALINGS IN THE SOFTWARE.
    ################################################################################

    import sys, time
    # To use the common functions, you should add this path.
    sys.path.append('/home/spypiggy/src/deepstream_python_apps/apps')
    import gi
    gi.require_version('Gst', '1.0')
    from gi.repository import GObject, Gst
    from common.is_aarch_64 import is_aarch64
    from common.bus_call import bus_call

    import pyds

    start = time.time()

    def osd_sink_pad_buffer_probe(pad,info,u_data):
        global start
        frame_number=0
        num_rects=0

        gst_buffer = info.get_buffer()
        if not gst_buffer:
            print("Unable to get GstBuffer ")
            return

        batch_meta = pyds.gst_buffer_get_nvds_batch_meta(hash(gst_buffer))
        l_frame = batch_meta.frame_meta_list
        while l_frame is not None:
            now = time.time()
            try:
                frame_meta = pyds.NvDsFrameMeta.cast(l_frame.data)
            except StopIteration:
                break

            frame_number=frame_meta.frame_num
            num_rects = frame_meta.num_obj_meta
            l_obj=frame_meta.obj_meta_list
            while l_obj is not None:
                try:
                    obj_meta=pyds.NvDsObjectMeta.cast(l_obj.data)
                    print('class_id={}'.format(obj_meta.class_id))
                    print('object_id={}'.format(obj_meta.object_id))
                    print('obj_label={}'.format(obj_meta.obj_label))
                    print(' rect_params height={}'.format(obj_meta.rect_params.height))
                    print(' rect_params left={}'.format(obj_meta.rect_params.left))
                    print(' rect_params top={}'.format(obj_meta.rect_params.top))
                    print(' rect_params width={}'.format(obj_meta.rect_params.width))
                except StopIteration:
                    break
                # (red, green, blue, alpha). It seems that only the alpha channel is not working.
                obj_meta.rect_params.border_color.set(0.0, 1.0, 1.0, 0.0)
                try:
                    l_obj=l_obj.next
                except StopIteration:
                    break

            display_meta=pyds.nvds_acquire_display_meta_from_pool(batch_meta)
            display_meta.num_labels = 1
            py_nvosd_text_params = display_meta.text_params[0]
            py_nvosd_text_params.display_text = "Frame Number={} Number of Objects={} FPS={}".format(frame_number, num_rects, (1 / (now - start)))
            py_nvosd_text_params.x_offset = 10
            py_nvosd_text_params.y_offset = 12
            py_nvosd_text_params.font_params.font_name = "/usr/share/fonts/truetype/dejavu/DejaVuSans-Bold.ttf"
            py_nvosd_text_params.font_params.font_size = 20
            py_nvosd_text_params.font_params.font_color.set(0.2, 0.2, 1.0, 1)  # (red, green, blue, alpha)
            py_nvosd_text_params.set_bg_clr = 1
            py_nvosd_text_params.text_bg_clr.set(0.2, 0.2, 0.2, 0.3)
            pyds.nvds_add_display_meta_to_frame(frame_meta, display_meta)
            try:
                l_frame=l_frame.next
            except StopIteration:
                break
            start = now

        return Gst.PadProbeReturn.OK  # DROP, HANDLED, OK, PASS, REMOVE


    def main(args):
        # Check input arguments
        if len(args) != 2:
            sys.stderr.write("usage: %s <media file or uri>\n" % args[0])
            sys.exit(1)

        GObject.threads_init()
        Gst.init(None)

        print("Creating Pipeline \n ")
        pipeline = Gst.Pipeline()
        if not pipeline:
            sys.stderr.write(" Unable to create Pipeline \n")

        source = Gst.ElementFactory.make("filesrc", "file-source")
        if not source:
            sys.stderr.write(" Unable to create Source \n")

        h264parser = Gst.ElementFactory.make("h264parse", "h264-parser")
        if not h264parser:
            sys.stderr.write(" Unable to create h264 parser \n")

        decoder = Gst.ElementFactory.make("nvv4l2decoder", "nvv4l2-decoder")
        if not decoder:
            sys.stderr.write(" Unable to create Nvv4l2 Decoder \n")

        streammux = Gst.ElementFactory.make("nvstreammux", "Stream-muxer")
        if not streammux:
            sys.stderr.write(" Unable to create NvStreamMux \n")

        pgie = Gst.ElementFactory.make("nvinfer", "primary-inference")
        if not pgie:
            sys.stderr.write(" Unable to create pgie \n")

        nvvidconv = Gst.ElementFactory.make("nvvideoconvert", "convertor")
        if not nvvidconv:
            sys.stderr.write(" Unable to create nvvidconv \n")

        nvosd = Gst.ElementFactory.make("nvdsosd", "onscreendisplay")
        if not nvosd:
            sys.stderr.write(" Unable to create nvosd \n")

        if is_aarch64():
            transform = Gst.ElementFactory.make("nvegltransform", "nvegl-transform")

        sink = Gst.ElementFactory.make("nveglglessink", "nvvideo-renderer")
        if not sink:
            sys.stderr.write(" Unable to create egl sink \n")

        source.set_property('location', args[1])
        streammux.set_property('width', 1920)
        streammux.set_property('height', 1080)
        streammux.set_property('batch-size', 1)
        streammux.set_property('batched-push-timeout', 4000000)
        pgie.set_property('config-file-path', "DeepStream/config_infer_primary_yoloV4_tiny.txt")

        pipeline.add(source)
        pipeline.add(h264parser)
        pipeline.add(decoder)
        pipeline.add(streammux)
        pipeline.add(pgie)
        pipeline.add(nvvidconv)
        pipeline.add(nvosd)
        pipeline.add(sink)
        if is_aarch64():
            pipeline.add(transform)

        print("Linking elements in the Pipeline \n")
        source.link(h264parser)
        h264parser.link(decoder)

        sinkpad = streammux.get_request_pad("sink_0")
        if not sinkpad:
            sys.stderr.write(" Unable to get the sink pad of streammux \n")
        srcpad = decoder.get_static_pad("src")
        if not srcpad:
            sys.stderr.write(" Unable to get source pad of decoder \n")
        srcpad.link(sinkpad)
        streammux.link(pgie)
        pgie.link(nvvidconv)
        nvvidconv.link(nvosd)
        if is_aarch64():
            nvosd.link(transform)
            transform.link(sink)
        else:
            nvosd.link(sink)

        loop = GObject.MainLoop()
        bus = pipeline.get_bus()
        bus.add_signal_watch()
        bus.connect ("message", bus_call, loop)

        osdsinkpad = nvosd.get_static_pad("sink")
        if not osdsinkpad:
            sys.stderr.write(" Unable to get sink pad of nvosd \n")

        osdsinkpad.add_probe(Gst.PadProbeType.BUFFER, osd_sink_pad_buffer_probe, 0)

        pipeline.set_state(Gst.State.PLAYING)
        try:
            loop.run()
        except:
            pass
        # cleanup
        pipeline.set_state(Gst.State.NULL)

    if __name__ == '__main__':
        sys.exit(main(sys.argv))

<demo_yolo.py>
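The probe in demo_yolo.py derives the FPS from the gap between two consecutive buffers, so the displayed number can jump from frame to frame. One possible alternative is to average over a short window of timestamps. The following is a minimal sketch of that idea. `FpsMeter` is a hypothetical helper and is not part of pyds or the DeepStream samples.

```python
from collections import deque


class FpsMeter:
    """Average FPS over the last `window` frame timestamps."""

    def __init__(self, window=30):
        self.timestamps = deque(maxlen=window)

    def tick(self, now):
        """Record a frame timestamp in seconds and return the average FPS."""
        self.timestamps.append(now)
        if len(self.timestamps) < 2:
            return 0.0
        elapsed = self.timestamps[-1] - self.timestamps[0]
        if elapsed <= 0:
            return 0.0
        # N timestamps span N - 1 frame intervals.
        return (len(self.timestamps) - 1) / elapsed


meter = FpsMeter(window=5)
for t in (0.0, 0.1, 0.2, 0.3):  # synthetic timestamps, 0.1 s apart
    fps = meter.tick(t)
print(round(fps, 1))
```

Inside the probe, `meter.tick(time.time())` would take the place of `1 / (now - start)`.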

If you run the code:

    (python) spypiggy@XavierNX:~/src/pytorch-YOLOv4$ python demo_yolo.py /opt/nvidia/deepstream/deepstream/samples/streams/sample_720p.h264
    ......
    ......
    class_id=2
    object_id=18446744073709551615
    obj_label=car
     rect_params height=29.0625
     rect_params left=553.125
     rect_params top=471.5625
     rect_params width=33.75
    class_id=0
    object_id=18446744073709551615
    obj_label=person
     rect_params height=62.34375
     rect_params left=446.953125
     rect_params top=488.4375
     rect_params width=23.4375
    class_id=0
    object_id=18446744073709551615
    obj_label=person
     rect_params height=70.078125
     rect_params left=413.90625
     rect_params top=478.828125
     rect_params width=20.15625
    class_id=0
    object_id=18446744073709551615
    obj_label=person
     rect_params height=63.75
     rect_params left=427.5
     rect_params top=486.5625
     rect_params width=24.375
    class_id=2
    object_id=18446744073709551615
    obj_label=car
     rect_params height=107.0877914428711
     rect_params left=634.0028686523438
     rect_params top=477.3771667480469
     rect_params width=134.88897705078125
    ......
    ......

class_id is the index of the label in labels.txt. Note that it therefore differs from the class_id used by the primary detector resnet10.caffemodel tested in the previous blog. While resnet10.caffemodel recognizes 4 classes, YOLO recognizes 80 classes.
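The object_id printed for every detection, 18446744073709551615, equals 2^64 - 1. As far as I know, DeepStream's metadata header defines this value as UNTRACKED_OBJECT_ID (0xFFFFFFFFFFFFFFFF), which fits this pipeline because it contains no tracker element. The following sketch only checks the arithmetic. `is_tracked` is a hypothetical helper.

```python
# Assumed to mirror the UNTRACKED_OBJECT_ID constant in DeepStream's nvdsmeta.h.
UNTRACKED_OBJECT_ID = 0xFFFFFFFFFFFFFFFF  # 2**64 - 1


def is_tracked(object_id):
    """Return True when a tracker has assigned a real ID to the object."""
    return object_id != UNTRACKED_OBJECT_ID


print(UNTRACKED_OBJECT_ID == 18446744073709551615)
print(is_tracked(18446744073709551615))
```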
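If you want to turn a class_id back into a name yourself, the label file can be read into a list. The following is a minimal sketch, assuming one label per line with the line order matching class_id, which agrees with the output above (0 is person, 2 is car). `load_labels` and `label_for` are hypothetical helpers, and the three-line file written here is only a stand-in for the real 80-class file.

```python
import os
import tempfile


def load_labels(path):
    """Read one label per line; the list index is assumed to be the class_id."""
    with open(path, encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]


def label_for(labels, class_id):
    """Return the label for class_id, or "unknown" when out of range."""
    if 0 <= class_id < len(labels):
        return labels[class_id]
    return "unknown"


# Write a tiny stand-in label file so the example runs anywhere.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "labels.txt")
    with open(path, "w", encoding="utf-8") as f:
        f.write("person\nbicycle\ncar\n")
    labels = load_labels(path)

print(label_for(labels, 0), label_for(labels, 2))
```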

<yolov4 result>

At first glance, it seems to work well without any problems. However, the output box coordinate values including the class_id value in the probe function are not updated at all. For this reason, I also excluded the object statistics from the upper text display.

Under the Hood

If you intend to use the YOLOv3 model, do it in the following order:

Download the YOLOv3 models

I will use the previous /home/spypiggy/src/pytorch-YOLOv4 as the working directory.

Download the weight file and cfg file for YOLOv3.

    spypiggy@XavierNX:~/src/pytorch-YOLOv4$ wget https://pjreddie.com/media/files/yolov3.weights
    spypiggy@XavierNX:~/src/pytorch-YOLOv4$ wget https://pjreddie.com/media/files/yolov3-tiny.weights
    spypiggy@XavierNX:~/src/pytorch-YOLOv4$ cd cfg
    spypiggy@XavierNX:~/src/pytorch-YOLOv4/cfg$ wget https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov3.cfg
    spypiggy@XavierNX:~/src/pytorch-YOLOv4/cfg$ wget https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov3-tiny.cfg

Prepare the configuration files

Copy the config files from the /opt/nvidia/deepstream/deepstream-5.0/sources/objectDetector_Yolo directory to your working directory. If you use Python to construct a pipeline, you don't need the deepstream_app_config_XXX.txt files. Copy only the config_infer_primary_yoloV3.txt and config_infer_primary_yoloV3_tiny.txt files. Correct the path names of the weight, cfg, and label files. These are my configuration files.

    [property]
    gpu-id=0
    net-scale-factor=0.0039215697906911373
    #0=RGB, 1=BGR
    model-color-format=0
    custom-network-config=/home/spypiggy/src/pytorch-YOLOv4/cfg/yolov3.cfg
    model-file=/home/spypiggy/src/pytorch-YOLOv4/yolov3.weights
    #model-engine-file=yolov3_b1_gpu0_int8.engine
    labelfile-path=/opt/nvidia/deepstream/deepstream/sources/objectDetector_Yolo/labels.txt
    int8-calib-file=/opt/nvidia/deepstream/deepstream/sources/objectDetector_Yolo/yolov3-calibration.table.trt7.0
    ## 0=FP32, 1=INT8, 2=FP16 mode
    network-mode=0
    num-detected-classes=80
    gie-unique-id=1
    # 0:Detector, 1:Classifier, 2:Segmentation
    network-type=0
    #is-classifier=0
    ## 0=Group Rectangles, 1=DBSCAN, 2=NMS, 3= DBSCAN+NMS Hybrid, 4 = None(No clustering)
    cluster-mode=2
    maintain-aspect-ratio=1
    parse-bbox-func-name=NvDsInferParseCustomYoloV3
    custom-lib-path=/opt/nvidia/deepstream/deepstream/sources/objectDetector_Yolo/nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so
    engine-create-func-name=NvDsInferYoloCudaEngineGet
    #scaling-filter=0
    #scaling-compute-hw=0

    [class-attrs-all]
    nms-iou-threshold=0.7
    threshold=0.7

    [property]
    gpu-id=0
    net-scale-factor=0.0039215697906911373
    #0=RGB, 1=BGR
    model-color-format=0
    custom-network-config=/home/spypiggy/src/pytorch-YOLOv4/cfg/yolov3-tiny.cfg
    model-file=/home/spypiggy/src/pytorch-YOLOv4/yolov3-tiny.weights
    #model-engine-file=yolov3-tiny_b1_gpu0_fp32.engine
    labelfile-path=/opt/nvidia/deepstream/deepstream/sources/objectDetector_Yolo/labels.txt
    ## 0=FP32, 1=INT8, 2=FP16 mode
    network-mode=0
    num-detected-classes=80
    gie-unique-id=1
    # 0:Detector, 1:Classifier, 2:Segmentation
    network-type=0
    #is-classifier=0
    ## 0=Group Rectangles, 1=DBSCAN, 2=NMS, 3= DBSCAN+NMS Hybrid, 4 = None(No clustering)
    cluster-mode=2
    maintain-aspect-ratio=1
    parse-bbox-func-name=NvDsInferParseCustomYoloV3Tiny
    custom-lib-path=/opt/nvidia/deepstream/deepstream/sources/objectDetector_Yolo/nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so
    engine-create-func-name=NvDsInferYoloCudaEngineGet
    #scaling-filter=0
    #scaling-compute-hw=0

    [class-attrs-all]
    nms-iou-threshold=0.7
    threshold=0.7

Now, in the demo_yolo.py Python code, you can replace the configuration file of the nvinfer element with the desired YOLOv3 file. With YOLOv3, the model file is used directly, without the ONNX -> TensorRT conversion done for YOLOv4. However, DeepStream performs a TensorRT conversion internally, so the initial loading time is a little longer. When running YOLOv3, I saw no significant difference in execution speed or accuracy compared to YOLOv4.
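Rather than editing the script each time, the configuration file could be chosen from the command line. The following is a minimal sketch of that idea. The `--model` option, the `CONFIG_FILES` table, and the assumption that the YOLOv3 files were copied into the DeepStream subdirectory are all mine, so adjust the paths to wherever your files actually live.

```python
import argparse

# Hypothetical mapping from a model name to its nvinfer configuration file.
CONFIG_FILES = {
    "yolov4": "DeepStream/config_infer_primary_yoloV4.txt",
    "yolov4-tiny": "DeepStream/config_infer_primary_yoloV4_tiny.txt",
    "yolov3": "DeepStream/config_infer_primary_yoloV3.txt",
    "yolov3-tiny": "DeepStream/config_infer_primary_yoloV3_tiny.txt",
}


def parse_args(argv):
    """Return (media path, nvinfer config path) from command-line arguments."""
    parser = argparse.ArgumentParser(description="Select a YOLO config file")
    parser.add_argument("media", help="H.264 elementary stream to play")
    parser.add_argument("--model", choices=sorted(CONFIG_FILES),
                        default="yolov4-tiny")
    args = parser.parse_args(argv)
    return args.media, CONFIG_FILES[args.model]


media, config_path = parse_args(["sample_720p.h264", "--model", "yolov3"])
print(config_path)
# In demo_yolo.py this path would then be passed to the nvinfer element:
#   pgie.set_property('config-file-path', config_path)
```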

Wrapping Up

It is recommended to use YOLOv3, which is officially supported by DeepStream 5.0. I expect that NVIDIA will support YOLOv4 in the future.
