Prerequisites

I use a Python virtual environment on the Xavier NX. TensorFlow and PyTorch, two frequently used frameworks, are already installed in that virtual environment. Please complete the setup described in the posts listed below before following the instructions here. I also installed DeepStream 5.0 and the Python examples as described in my previous posts.

- Jetson Xavier NX - JetPack 4.4(production release) headless setup

- Jetson Xavier NX - Python virtual environment and ML platforms(tensorflow, Pytorch) installation

- Xavier NX-DeepStream 5.0 #1 - Installation

- Xavier NX-DeepStream 5.0 #2 - Run Python Samples(test1)

In my previous post, I explained an example that finds vehicles, bicycles, people, and road signs by analyzing streams from video files or webcams in real time using DeepStream 5.0 and Python. This post looks at the second example, apps/deepstream-test2, which uses the same model as the previous post.

Example 2

The second example code is in deepstream_python_apps/apps/deepstream-test2.

(python) spypiggy@XavierNX:~/src/deepstream_python_apps/apps/deepstream-test2$ ls -al
total 64
drwxrwxr-x  2 spypiggy spypiggy  4096 Aug  9 09:32 .
drwxrwxr-x 11 spypiggy spypiggy  4096 Aug  6 06:57 ..
-rw-rw-r--  1 spypiggy spypiggy 12296 Aug  9 09:32 deepstream_test_2.py
-rw-rw-r--  1 spypiggy spypiggy  3841 Aug  9 09:16 dstest2_pgie_config.txt
-rw-rw-r--  1 spypiggy spypiggy  4215 Aug  9 09:17 dstest2_sgie1_config.txt
-rw-rw-r--  1 spypiggy spypiggy  4202 Aug  9 09:18 dstest2_sgie2_config.txt
-rw-rw-r--  1 spypiggy spypiggy  4262 Aug  9 09:19 dstest2_sgie3_config.txt
-rw-rw-r--  1 spypiggy spypiggy  1787 Aug  6 06:57 dstest2_tracker_config.txt
-rw-rw-r--  1 spypiggy spypiggy  3292 Aug  6 06:57 README
-rw-rw-r--  1 spypiggy spypiggy  3236 Aug  6 06:57 tracker_config.yml

Pipeline

The pipeline of GStreamer elements used in the example deepstream_test_2.py is as follows.

Elements in the area indicated by the blue dotted line are newly added ones. The connection of elements is quite intuitive and easy to understand. The elements to be looked at carefully are as follows.

- nvtracker : This plugin tracks detected objects and gives each new object a unique ID. When you run the example, you will see that objects recognized by DeepStream are assigned numbers, and an object keeps its number from frame to frame. In other words, the plugin implements tracking that takes the movement of objects into account. However, if detection fails for an object in some frame along the way, the object is assigned a new number when it is detected again; that is, it is treated as a new object. The resnet10.caffemodel model used in DeepStream 5.0 is very fast, but its accuracy is relatively lower than that of a model based on ResNet-50. As a result, the tracking number of an object often changes during tracking.
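The ID behaviour described above can be illustrated with a toy tracker. This is not how nvtracker is implemented; it is only a minimal sketch, assuming boxes are matched by overlap (IoU) against the previous frame alone. The names `iou` and `ToyTracker` and the box coordinates are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


class ToyTracker:
    """Hypothetical tracker: matches boxes only against the previous frame."""

    def __init__(self, threshold=0.3):
        self.threshold = threshold
        self.next_id = 0
        self.previous = {}  # object id -> box seen in the previous frame

    def update(self, boxes):
        current = {}
        unmatched = dict(self.previous)
        ids = []
        for box in boxes:
            # Find the best-overlapping box from the previous frame.
            best_id, best_score = None, self.threshold
            for object_id, old_box in unmatched.items():
                score = iou(box, old_box)
                if score >= best_score:
                    best_id, best_score = object_id, score
            if best_id is None:
                # No match: treat the detection as a new object.
                best_id = self.next_id
                self.next_id += 1
            else:
                del unmatched[best_id]
            current[best_id] = box
            ids.append(best_id)
        self.previous = current
        return ids


tracker = ToyTracker()
frames = [
    [(10, 10, 50, 50)],  # object detected
    [(12, 10, 52, 50)],  # same object, moved slightly
    [],                  # detector misses the object
    [(16, 10, 56, 50)],  # detected again
]
ids_per_frame = [tracker.update(boxes) for boxes in frames]
print(ids_per_frame)  # [[0], [0], [], [1]]
```

The object keeps ID 0 while it is detected in consecutive frames, but after the missed frame it comes back as ID 1, which mirrors the number changes you can see when running the example.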

And the newly added configuration files that the nvinfer element will use are as follows. These files contain various information such as the model and label values that the nvinfer element will use.

- dstest2_sgie1_config.txt : This configuration file contains information about the model that identifies the color of the car. According to the labels.txt file in the /opt/nvidia/deepstream/deepstream/samples/models/Secondary_CarColor directory, the colors this model can recognize are: black, blue, brown, gold, green, gray, maroon, orange, red, silver, white, yellow

- dstest2_sgie2_config.txt : This configuration file contains information about the model that identifies the maker of the car. According to the labels.txt file in the /opt/nvidia/deepstream/deepstream/samples/models/Secondary_CarMaker directory, the makers this model can recognize are: acura, audi, bmw, chevrolet, chrysler, dodge, ford, gmc, honda, hyundai, infiniti, jeep, kia, lexus, mazda, mercedes, nissan, subaru, toyota, volkswagen

- dstest2_sgie3_config.txt : This configuration file contains information about the model that identifies the vehicle type. According to the labels.txt file in the /opt/nvidia/deepstream/deepstream/samples/models/Secondary_VehicleTypes directory, the vehicle types this model can recognize are: coupe, largevehicle, sedan, suv, truck, van
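To inspect such a label list from Python, something like the following could be used. It is a rough sketch, assuming the label file stores its labels separated by semicolons or newlines (check the actual file on your device); `parse_labels` is a hypothetical helper, and the sample string simply repeats the color list given above.

```python
def parse_labels(text):
    """Split label text on semicolons or newlines and drop empty entries."""
    normalized = text.replace("\n", ";")
    return [label.strip() for label in normalized.split(";") if label.strip()]


# Sample text built from the color list in this post (assumed separator: ';').
sample = "black;blue;brown;gold;green;gray;maroon;orange;red;silver;white;yellow\n"
colors = parse_labels(sample)
print(len(colors), colors[0], colors[-1])  # 12 black yellow
```

To read a real file, pass the result of `open(path).read()` to `parse_labels`.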

In summary, the pipeline above searches each frame of a video stream for four object classes (vehicle, bicycle, person, roadsign) and then, for each car, determines the car color, car maker, and car type. In addition, it tracks the detected objects by assigning each one a unique ID.

Configuration

[property]
gpu-id=0
net-scale-factor=0.0039215697906911373
model-file=/opt/nvidia/deepstream/deepstream/samples/models/Primary_Detector/resnet10.caffemodel
#model-file=../../../../samples/models/Primary_Detector/resnet10.caffemodel
proto-file=/opt/nvidia/deepstream/deepstream/samples/models/Primary_Detector/resnet10.prototxt
#proto-file=../../../../samples/models/Primary_Detector/resnet10.prototxt
#model-engine-file=/opt/nvidia/deepstream/deepstream/samples/models/Primary_Detector/resnet10.caffemodel_b1_gpu0_int8.engine
#model-engine-file=../../../../samples/models/Primary_Detector/resnet10.caffemodel_b1_gpu0_int8.engine
labelfile-path=/opt/nvidia/deepstream/deepstream/samples/models/Primary_Detector/labels.txt
#labelfile-path=../../../../samples/models/Primary_Detector/labels.txt
int8-calib-file=/opt/nvidia/deepstream/deepstream/samples/models/Primary_Detector/cal_trt.bin
#int8-calib-file=../../../../samples/models/Primary_Detector/cal_trt.bin

......

Be Careful : Do not leave tabs or space characters at the end of any line in the configuration file.
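A quick way to check for this before running the example is to scan the configuration text for trailing whitespace. The following is a minimal sketch; `find_trailing_whitespace` is a hypothetical helper, and the sample text is made up for illustration.

```python
def find_trailing_whitespace(text):
    """Return 1-based line numbers whose content ends with a space or tab."""
    bad_lines = []
    for number, line in enumerate(text.splitlines(), start=1):
        if line != line.rstrip(" \t"):
            bad_lines.append(number)
    return bad_lines


# Made-up sample: line 2 ends with a space, line 4 ends with a tab.
sample = (
    "[property]\n"
    "gpu-id=0 \n"
    "net-scale-factor=0.0039215697906911373\n"
    "process-mode=1\t\n"
)
print(find_trailing_whitespace(sample))  # [2, 4]
```

For a real file, read it first, for example `find_trailing_whitespace(open("dstest2_pgie_config.txt").read())`.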

Test

Now run the code.

spypiggy@XavierNX:~/src/deepstream_python_apps/apps/deepstream-test2$ source /home/spypiggy/python/bin/activate
(python) spypiggy@XavierNX:~/src/deepstream_python_apps/apps/deepstream-test2$ python3 deepstream_test_2.py /opt/nvidia/deepstream/deepstream/samples/streams/sample_720p.h264

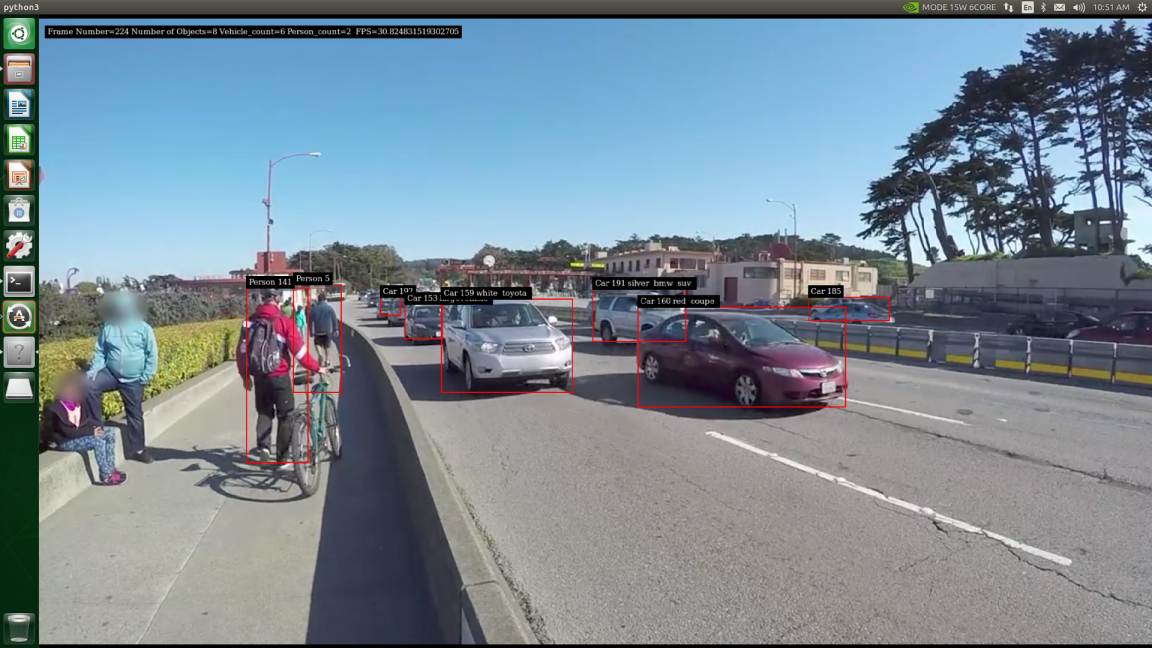

If you can see the following screen, it is running successfully.

It's not much different from the example in the previous post. The first nvinfer element recognizes 4 types of objects and boxes are drawn around them, so the overall appearance is the same. However, the text describing each box is slightly different. In the previous test, only the class name, such as Person or Car, was displayed, but this time a number is added, as in Person 141. This number is the unique ID that identifies the recognized object. Cars are labeled like Car 159 white toyota, Car 160 red coupe, and Car 191 silver bmw suv. The text after the unique ID is the car color, car maker, and car type recognized by the remaining nvinfer elements.

Under the Hood

Benefits of GStreamer Pipeline

Using GStreamer's pipeline, you can easily add, delete, and change the order of elements. So, if you are not interested in car makers, you can exclude them from the pipeline above.

Original code

sgie1.link(sgie2)
sgie2.link(sgie3)
sgie3.link(nvvidconv)

To

sgie1.link(sgie3)
sgie3.link(nvvidconv)

After this change, you should no longer see car maker information on the screen.

And the sgie1, sgie2, sgie3 elements can work in any order. So even if you change the order of links in the pipeline, there is no problem at all. However, the order of the screen output text might change.

Be Careful : The sgie1, sgie2, and sgie3 elements must come after the nvtracker element in the pipeline.
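Because the order is decided purely by the sequence of link() calls, a small helper can make reordering or dropping elements less error-prone. This is only a sketch: `link_chain` is a hypothetical helper that is not part of the sample code, and `FakeElement` is a stand-in so the snippet runs without GStreamer. With real elements, it relies on Gst.Element.link() returning False when linking fails. The variable name `tracker` for the nvtracker element is an assumption.

```python
def link_chain(*elements):
    """Link each element to the next one; raise if any link() call fails."""
    for upstream, downstream in zip(elements, elements[1:]):
        if not upstream.link(downstream):
            raise RuntimeError(
                "could not link %s -> %s"
                % (upstream.get_name(), downstream.get_name())
            )


class FakeElement:
    """Stand-in for a Gst.Element so the sketch runs without GStreamer."""

    def __init__(self, name):
        self._name = name
        self.downstream = None

    def get_name(self):
        return self._name

    def link(self, other):
        self.downstream = other
        return True


# Secondary classifiers stay behind the tracker; sgie2 (car maker) is left out.
tracker, sgie1, sgie3, nvvidconv = (
    FakeElement(name) for name in ("tracker", "sgie1", "sgie3", "nvvidconv")
)
link_chain(tracker, sgie1, sgie3, nvvidconv)
print(sgie1.downstream.get_name())  # sgie3
```

Changing the order of the classifiers then only means changing the order of the arguments, as long as the tracker stays in front of them.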

Process_Mode

The dstest2_sgie1_config.txt, dstest2_sgie2_config.txt, and dstest2_sgie3_config.txt files have one major difference compared to the dstest2_pgie_config.txt file. In dstest2_pgie_config.txt, 'process-mode=1' is defined, but 'process-mode=2' is set in these three files. The process-mode value defines whether the nvinfer element processes the full frame image or only part of the image.

If this value is 1, the full frame is used, and if it is 2, cropped object images are used. The first nvinfer element, which uses the dstest2_pgie_config.txt configuration file, has this value set to 1 and therefore processes full frames. The remaining nvinfer elements have it set to 2, so they process only the cropped regions of the objects that the first nvinfer element already detected. This makes it possible to process quickly without repeating object detection, and it is why the execution speed of this example is not much different from that of the first example.
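To check which mode a configuration file uses, the value can be read from the [property] group. The following is a minimal sketch using Python's configparser, assuming the files are simple enough for it to parse; `read_process_mode` is a hypothetical helper, and the two sample strings are shortened stand-ins for the real files.

```python
import configparser


def read_process_mode(config_text):
    """Return the integer process-mode from the [property] group."""
    # Interpolation is disabled so a '%' in a value would not raise an error.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    return parser.getint("property", "process-mode")


# Shortened stand-ins for dstest2_pgie_config.txt and dstest2_sgie1_config.txt.
pgie_text = "[property]\ngpu-id=0\n#comment line\nprocess-mode=1\n"
sgie_text = "[property]\ngpu-id=0\nprocess-mode=2\n"

MODE_NAMES = {1: "full frame", 2: "cropped objects"}
for name, text in (("pgie", pgie_text), ("sgie1", sgie_text)):
    print(name, MODE_NAMES[read_process_mode(text)])
```

For a real file, pass `open(path).read()` instead of the sample strings.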


Tips : You must use the model in the Primary_Detector directory for the first nvinfer element in the pipeline. The models in the Secondary_CarColor, Secondary_CarMaker, and Secondary_VehicleTypes directories should be applied in the nvinfer elements that follow it.

Wrapping Up

So far, we have seen how to add secondary models to the pipeline to run additional recognition on regions that have already been detected.

Next time, I will look at how to get the location (box coordinates) of each recognized object in the probe function, which is the data we actually need.

